Data, modeling, and apps (V10.0)¶

April 29, 2024

The DataRobot v10.0.0 release includes many new data, modeling, apps, and admin features and enhancements, described below. For additional details of Release 10.0, see the MLOps and code-first release announcements.

In the spotlight¶

DataRobot introduces extensible, fully-customizable, cloud-agnostic GenAI capabilities¶

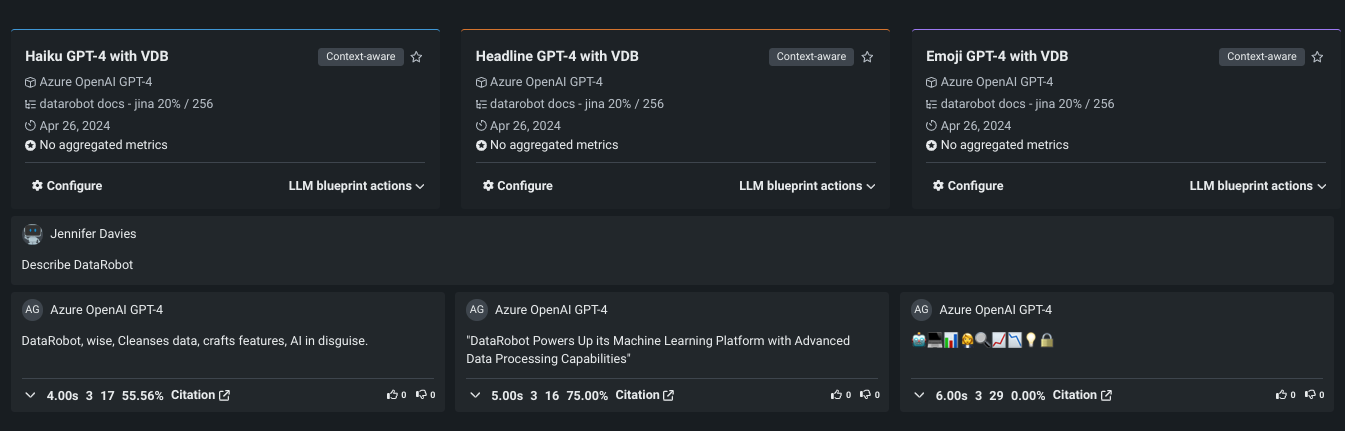

With DataRobot GenAI capabilities, you can generate text content using a variety of pre-trained large language models (LLMs). Additionally, you can tailor the content to your data by building vector databases and leveraging them in the LLM blueprints. The DataRobot GenAI offering builds off of DataRobot's predictive AI experience to provide confidence scores and enable you to bring your favorite libraries, choose your LLMs, and integrate third-party tools. Via a hosted notebook or DataRobot’s UI, embed or deploy AI wherever it will drive value for your business and leverage built-in governance for each asset in the pipeline. Through the DataRobot UI you can:

-

Build vector databases, both in the UI, and for deployed vector databases, with code.

-

Create playgrounds where you can build and compare LLM blueprints.

-

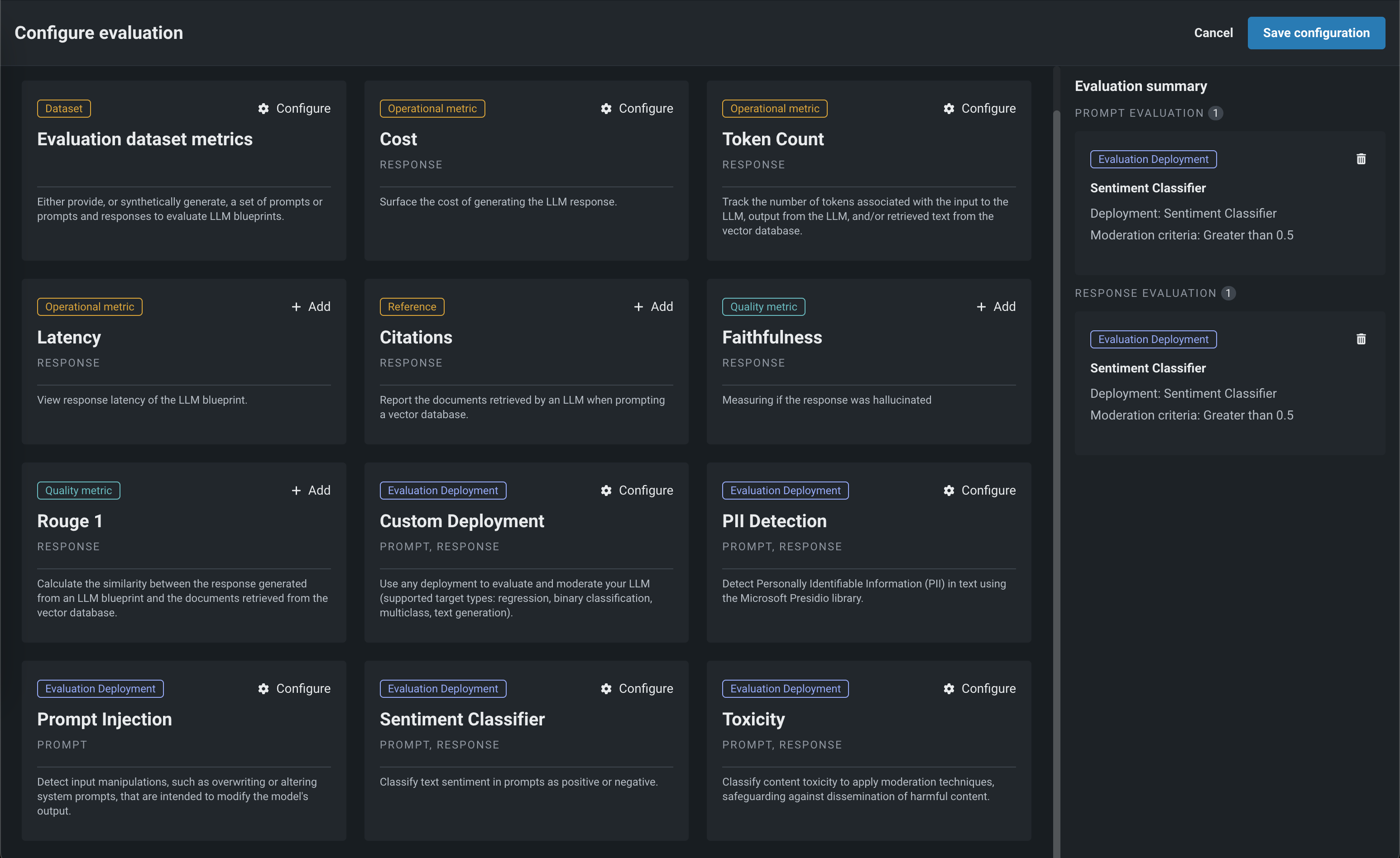

Configure evaluation and moderation guardrails.

And more.

Read an example use case of building an end-to-end generative AI solution at scale.

More docs coming soon!

The generative AI documentation is "continuous deployment." The most complete content can be found on the DataRobot public documentation site.

10.0 release¶

Release v10.0 provides updated UI string translations for the following languages:

- Japanese

- French

- Spanish

- Korean

- Brazilian Portuguese

Features grouped by capability

See these important deprecation announcements for information about changes to DataRobot's support for older, expiring functionality. This document also describes DataRobot's fixed issues.

Data enhancements¶

GA¶

Native Databricks connector added¶

The native Databricks connector, which lets you access data in Databricks on Azure or AWS, is now generally available in DataRobot. In addition to performance enhancements, the new connector also allows you to:

- Create and configure data connections.

- Authenticate a connection via service principal and share service principal credentials through secure configurations.

- Add Databricks datasets to a Use Case.

- Wrangle Databricks datasets, and then publish recipes to Databricks to materialize the output in the Data Registry.

- Use the public Python API client to access data via the Databricks connector.

Native AWS S3 connector added¶

The new AWS S3 connector is now generally available in DataRobot. In addition to performance enhancements, this connector also enables support for AWS S3 in Workbench, allowing you to:

- Create and configure data connections.

- Add AWS S3 datasets to a Use Case.

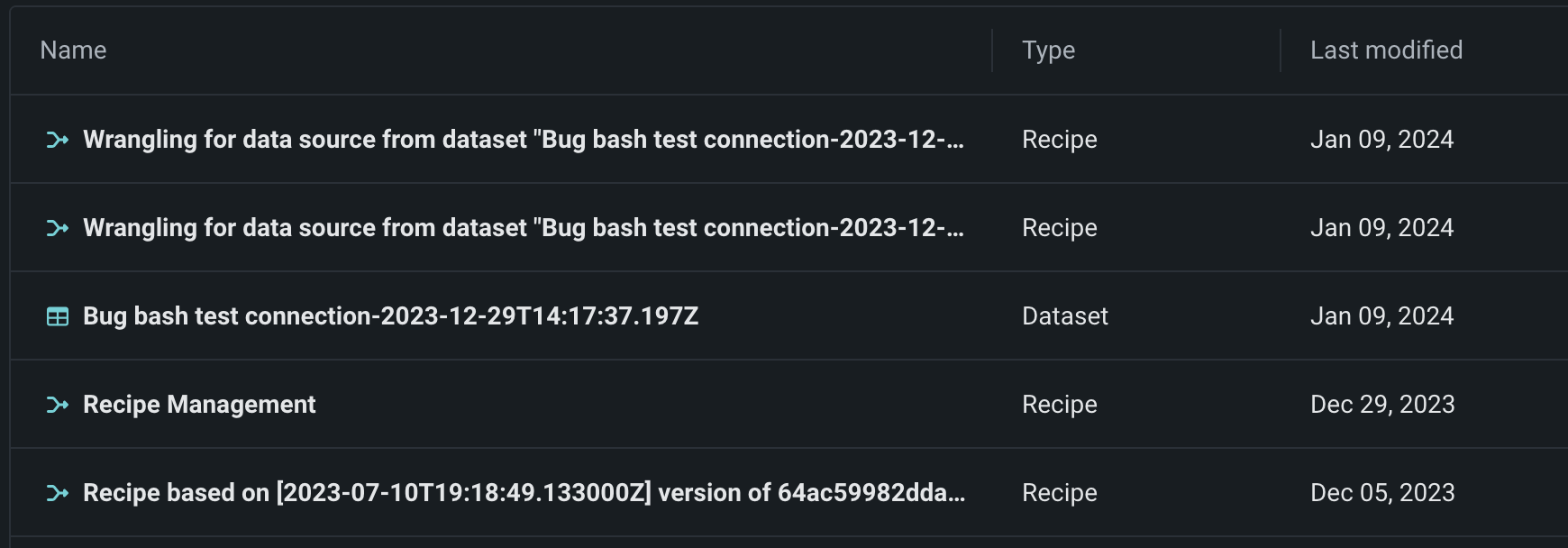

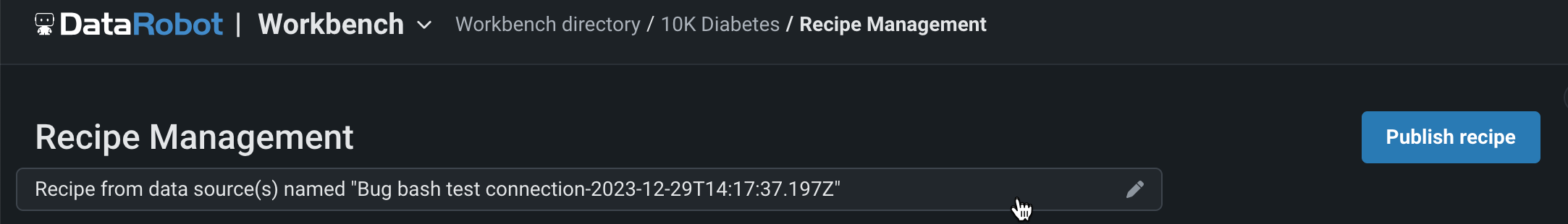

Improved recipe management in Workbench¶

This release introduces the following enhancements when wrangling data in Workbench:

- When you wrangle a dataset in your Use Case, including re-wrangling the same dataset, DataRobot creates and saves a copy of the recipe in the Data tab regardless of whether or not you add operations to it. Then, each time you modify the recipe, your changes are automatically saved. Additionally, you can open saved recipes to continue making changes.

- In a Use Case, the Datasets tab has been replaced by the Data tab, which now lists both datasets and recipes. New icons have also been added to the Data tab to quickly distinguish between datasets and recipes.

- During a wrangling session, add a helpful name and description to your recipe for context when re-wrangling a recipe in the future.

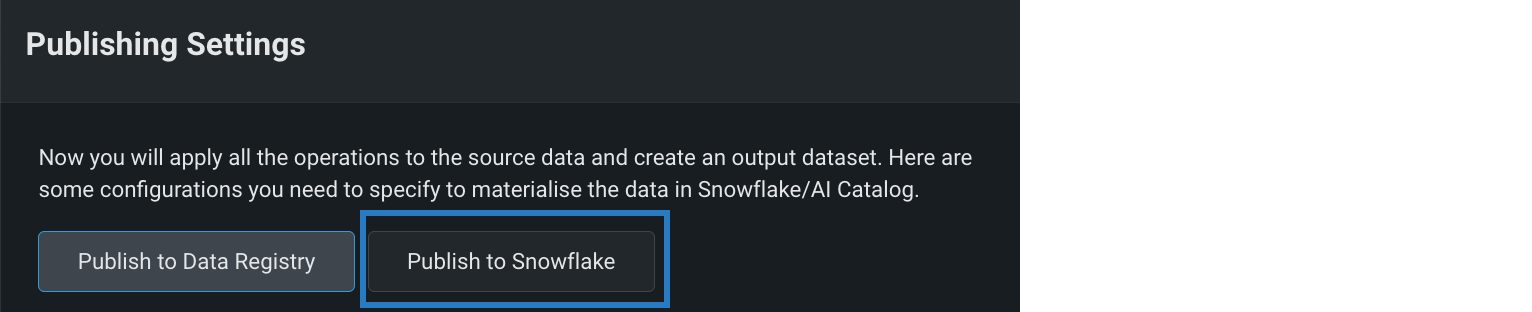

Enable in-source materialization for wrangled BigQuery and Snowflake datasets¶

In-source materialization is now generally available when wrangling BigQuery and Snowflake datasets. In Publishing Settings, click either Publish to BigQuery or Publish to Snowflake, depending on your data source. Selecting this option materializes an output dynamic dataset in the Data Registry as well as in your data source. This allows you to leverage the security, compliance, and financial controls specified within the data source's environment.

All wrangling operations generally available¶

The Join and Aggregate wrangling operations, previously available for preview, are now generally available in Workbench.

Support for Parquet file ingestion¶

Ingestion of Parquet files is now GA for the AI Catalog, training datasets, and predictions datasets. The following Parquet file types are supported:

- Single Parquet files

- Single zipped Parquet files

- Multiple Parquet files (registered as separate datasets)

- Zipped multi-Parquet file (merged to create a single dataset in DataRobot)

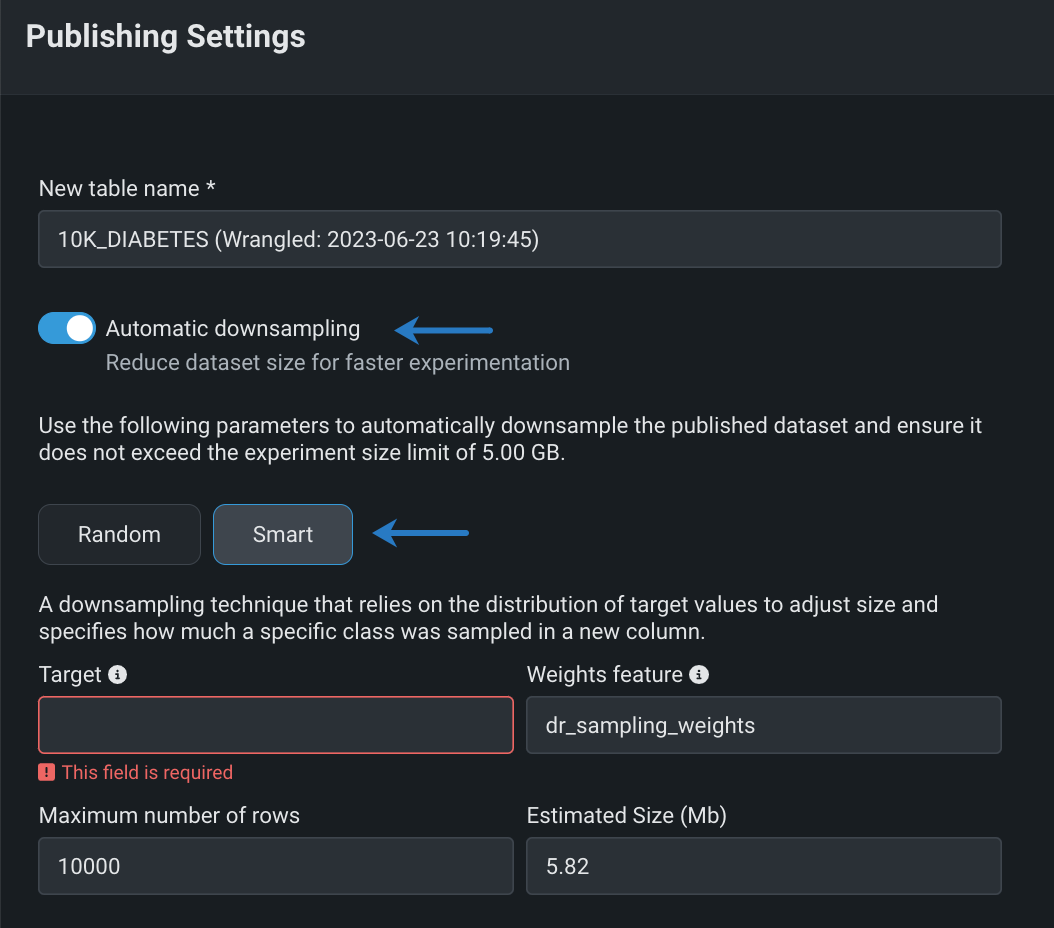

Publish wrangling recipes with smart downsampling¶

After building a wrangling recipe in Workbench, enable smart downsampling in the publishing settings to reduce the size of your output dataset and optimize model training. Smart downsampling is a data science technique that reduces the time it takes to fit a model without sacrificing accuracy, and that accounts for class imbalance by stratifying the sample by class.
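The general idea behind class-stratified downsampling can be sketched in a few lines of Python. This is a minimal illustration, not DataRobot's implementation: the function name `smart_downsample`, the `majority_fraction` parameter, and the weighting scheme are assumptions made for the example. It keeps every minority-class row, samples a fraction of the majority class, and attaches a weight so the retained majority rows still represent the original class total.

```python
import random
from collections import Counter


def smart_downsample(rows, target, majority_fraction, seed=0):
    """Return (row, weight) pairs after downsampling only the majority class.

    Hypothetical helper for illustration; names and behavior are assumptions.
    """
    rng = random.Random(seed)
    counts = Counter(row[target] for row in rows)
    majority_class, majority_count = counts.most_common(1)[0]

    majority_rows = [r for r in rows if r[target] == majority_class]
    minority_rows = [r for r in rows if r[target] != majority_class]

    # Keep a fixed number of majority rows so the sample size is predictable.
    keep = max(1, round(majority_count * majority_fraction))
    kept = rng.sample(majority_rows, keep)

    # Each kept majority row stands in for majority_count / keep original rows.
    weight = majority_count / keep
    return [(r, 1.0) for r in minority_rows] + [(r, weight) for r in kept]
```

Carrying the weight alongside each row is one common way to let a downstream learner correct for the changed class ratio; whether and how the published dataset exposes such a weight is not stated in these notes.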

Preview¶

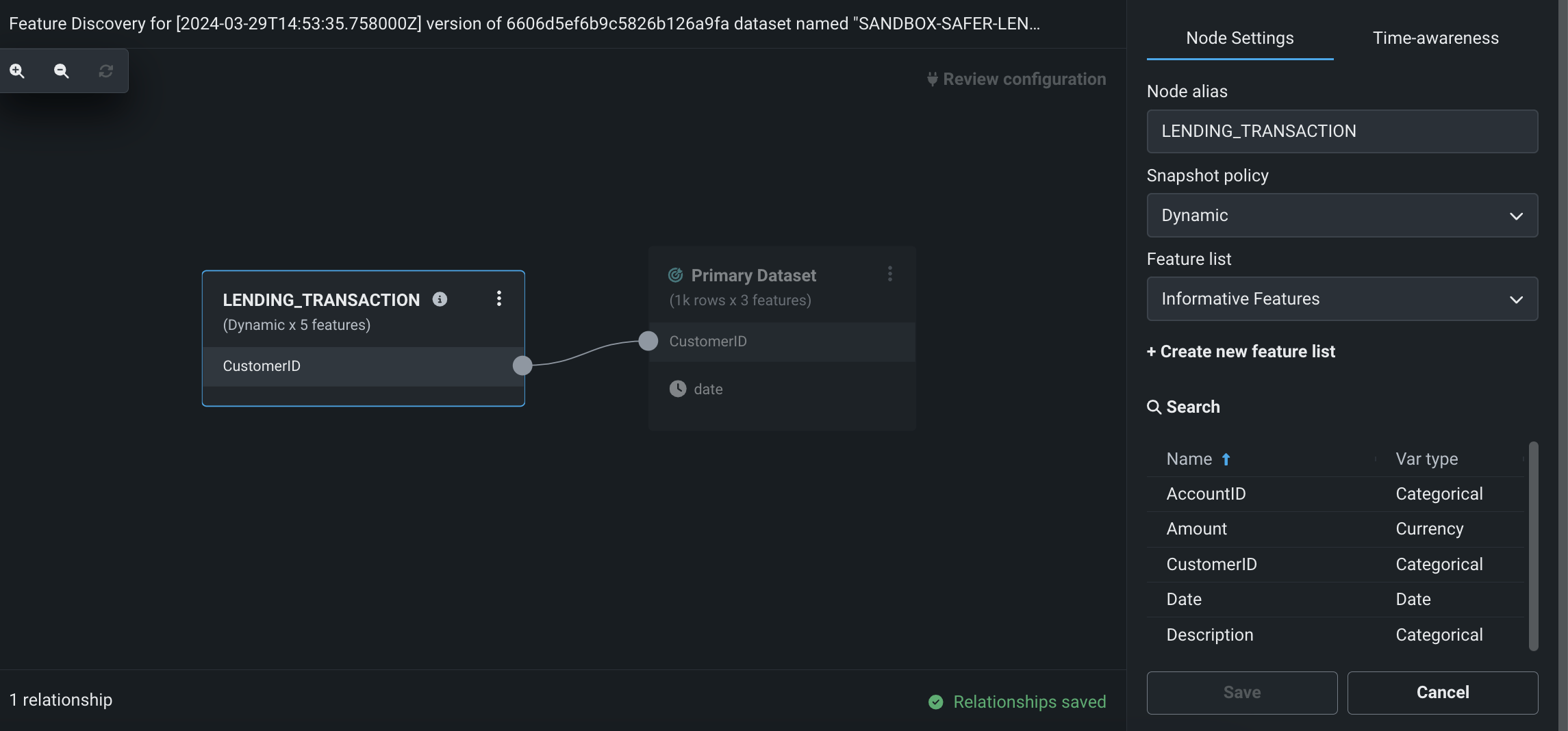

Perform Feature Discovery in Workbench¶

Now available for preview, Feature Discovery in Workbench lets you discover and generate new features from multiple datasets. You can initiate Feature Discovery in two places:

- On the Data tab, to the right of the dataset that will serve as the primary dataset, click the more options icon > Feature Discovery.

- On the data explore page of a specific dataset, click Data actions > Start feature discovery.

On this page, you can add secondary datasets and configure relationships between the datasets.

Publishing a Feature Discovery recipe instructs DataRobot to perform the specific joins and aggregations, generating a new output dataset that is then registered in the Data Registry and added to your current Use Case.
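As a rough illustration of what "joins and aggregations" across a primary and a secondary dataset produce, the sketch below derives two new features for each primary row. It is a simplified, assumption-laden example: the helper `aggregate_secondary`, the column names, and the choice of sum and count as aggregations are hypothetical and do not reflect the features DataRobot actually generates.

```python
from collections import defaultdict


def aggregate_secondary(primary, secondary, key, value):
    """Join secondary rows to primary rows on `key` and add sum/count features.

    Hypothetical helper; real Feature Discovery explores many more
    aggregations and relationship types than this.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for row in secondary:
        totals[row[key]] += row[value]
        counts[row[key]] += 1

    enriched = []
    for row in primary:
        k = row[key]
        enriched.append({
            **row,
            f"{value}_sum": totals.get(k, 0.0),   # 0.0 when no secondary rows match
            f"{value}_count": counts.get(k, 0),
        })
    return enriched


customers = [{"customer_id": 1}, {"customer_id": 2}]
transactions = [
    {"customer_id": 1, "amount": 10.0},
    {"customer_id": 1, "amount": 5.0},
]
output_dataset = aggregate_secondary(customers, transactions, "customer_id", "amount")
```

Here the first customer gains `amount_sum` and `amount_count` features derived from two transactions, while the second customer, with no matching rows, receives zeros.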

Preview documentation.

Feature flag(s) ON by default: Enable Feature Discovery in Workbench

Data improvements added to Workbench¶

This release introduces the following enhancements, available for preview, when working with data in Workbench:

- The data explore page now supports dataset versioning, as well as the ability to rename and download datasets.

- The feature list dropdown is now a separate tab on the data explore page.

- Autocomplete functionality has been improved for the Compute New Feature operation.

- You can now use dynamic datasets to set up an experiment.

Feature flag(s) OFF by default: Enable Enhanced Data Explore View

Improved recipe management in Workbench¶

Now available for preview, when you wrangle a dataset in your Use Case, including re-wrangling the same dataset, DataRobot creates and saves a copy of the recipe in the Data tab regardless of whether or not you add operations to it. Then, each time you modify the recipe, your changes are automatically saved. Additionally, you can open saved recipes to continue making changes.

New icons have been added to the Data tab to quickly distinguish between datasets and recipes.

During a wrangling session, add a helpful name and description to your recipe for context when re-wrangling a recipe in the future.

Preview documentation.

Feature flag OFF by default: Enable Recipe Management in Workbench

ADLS Gen2 connector added to DataRobot¶

Support for the ADLS Gen2 native connector has been added to both DataRobot Classic and Workbench, allowing you to:

- Create and configure data connections.

- Add ADLS Gen2 datasets.

Preview documentation.

Feature flag ON by default: Enable ADLS Gen2 Connector

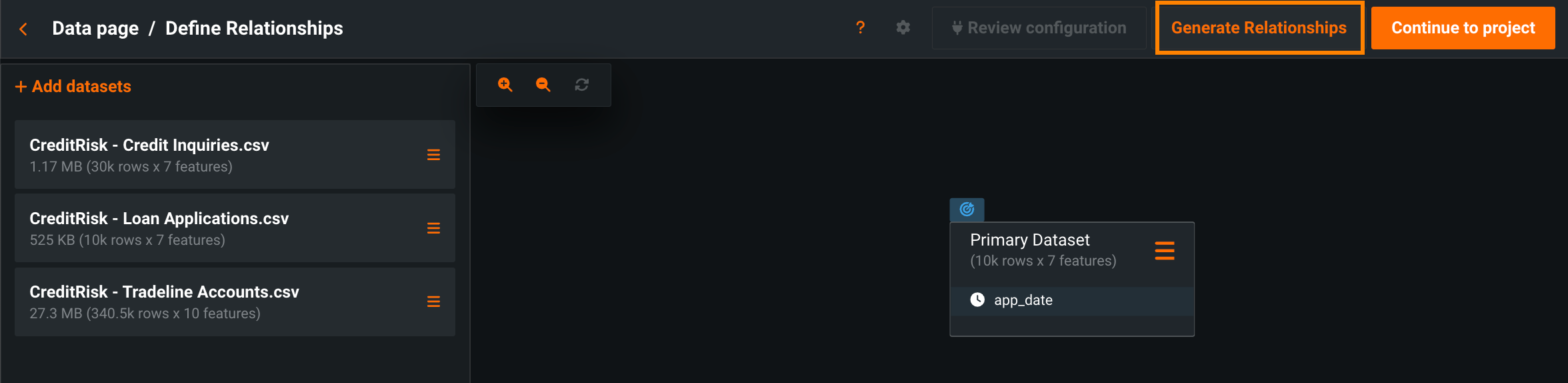

Automatically generate relationships for Feature Discovery¶

Now available for public preview, DataRobot can automatically detect and generate relationships between datasets in Feature Discovery projects, allowing you to quickly explore potential relationships when you’re unsure of how they connect. To automatically generate relationships, make sure all secondary datasets are added to your project, and then click Generate Relationships at the top of the Define Relationships page.

Preview documentation.

Feature flag OFF by default: Enable Feature Discovery Relationship Detection

Modeling features¶

GA¶

Workbench adds two partitioning methods for predictive projects¶

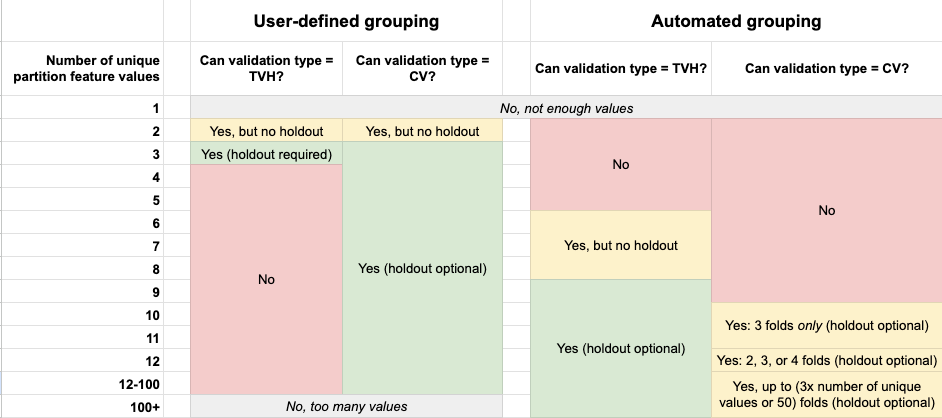

Now GA, Workbench supports user-defined grouping (“column-based” or “partition feature” in Classic) and automated grouping (“group partitioning” in Classic). While less common, user-defined and automated group partitioning provide a method for partitioning by partition feature, which is a feature from the dataset that serves as the basis of grouping. To use grouping, select a method based on the cardinality of the partition feature. Once selected, choose a validation type; see the documentation for assistance in selecting the appropriate validation type and more details about using grouping for partitioning.
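The property that group partitioning is meant to guarantee, namely that rows sharing a partition-feature value never land in different partitions, can be sketched as follows. This is an illustrative approach only, assuming a hash-based fold assignment; the function name `assign_group_folds` and the use of MD5 are choices made for the example, not a description of how DataRobot assigns partitions.

```python
import hashlib


def assign_group_folds(rows, partition_feature, n_folds=5):
    """Assign each row a fold index derived from its partition-feature value.

    Rows with the same value always hash to the same fold, so a group is
    never split across training and validation data.
    """
    folds = []
    for row in rows:
        value = str(row[partition_feature]).encode("utf-8")
        digest = hashlib.md5(value).hexdigest()  # stable across runs, unlike hash()
        folds.append(int(digest, 16) % n_folds)
    return folds


visits = [
    {"patient_id": "p1", "visit": 1},
    {"patient_id": "p2", "visit": 1},
    {"patient_id": "p1", "visit": 2},
]
fold_ids = assign_group_folds(visits, "patient_id", n_folds=3)
```

In this example both visits for patient `p1` receive the same fold index, which prevents information about one patient from leaking between partitions.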

Sliced insights for time-aware experiments now GA in DataRobot Classic¶

With this deployment, sliced insights for OTV and time series projects are now generally available for Lift Chart, ROC Curve, Feature Effects, and Feature Impact in DataRobot Classic. Sliced insights provide the option to view a subpopulation of a model's derived data based on feature values. Use the segment-based accuracy information gleaned from sliced insights, or compare the segments to the "global" slice (all data), to improve training data, create individual models per segment, or augment predictions post-deployment.
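Conceptually, a slice is a filter on feature values, and a sliced insight is the same metric computed on that filtered subpopulation and compared with the "global" slice. The sketch below shows this with plain accuracy; the helper names and the sample data are hypothetical, and the insights listed above (Lift Chart, ROC Curve, and so on) are considerably richer than a single number.

```python
def accuracy(rows):
    """Fraction of rows whose prediction matches the actual value."""
    return sum(r["actual"] == r["predicted"] for r in rows) / len(rows)


def sliced_accuracy(rows, feature, value):
    """Accuracy on the slice where `feature` equals `value`; None if the slice is empty."""
    slice_rows = [r for r in rows if r[feature] == value]
    return accuracy(slice_rows) if slice_rows else None


scored = [
    {"region": "east", "actual": 1, "predicted": 1},
    {"region": "east", "actual": 0, "predicted": 1},
    {"region": "west", "actual": 1, "predicted": 1},
    {"region": "west", "actual": 0, "predicted": 0},
]
global_accuracy = accuracy(scored)                        # the "global" slice: all data
east_accuracy = sliced_accuracy(scored, "region", "east")  # one segment
```

A segment that scores noticeably below the global slice, as the `east` rows do here, is a candidate for additional training data or a dedicated model.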

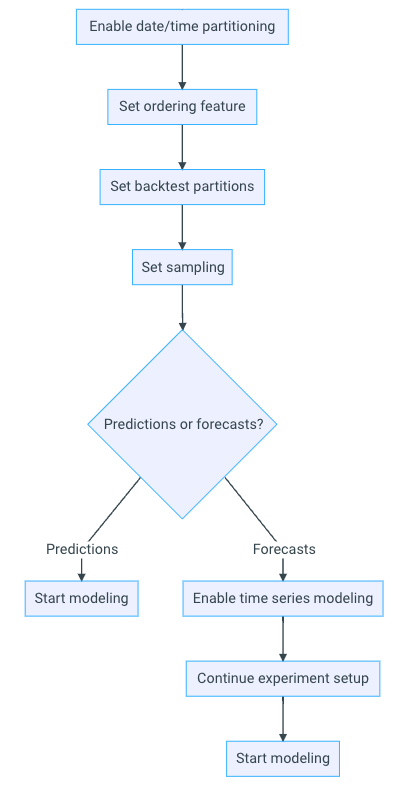

Date/time partitioning for time-aware experiments now GA¶

The ability to create time-aware experiments—either predictive or forecasting with time series—is now generally available. With a simplified workflow that shares much of the setup for row-by-row predictions and forecasting, clearer backtest modification tools, and the ability to reset changes before building, you can now quickly and easily work with time-relevant data.

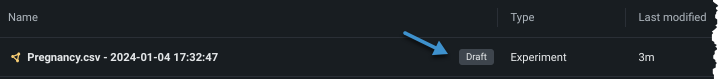

Save Workbench experiment progress prior to modeling¶

When creating Workbench experiments, you can now save your progress as a draft, navigate away from the experiment setup page, and return to the setup later. Draft status is indicated on the Use Case’s home page.

Uploading, modeling, and generating insights on 10GB for OTV experiments¶

To improve the scalability and user experience for OTV (out-of-time validation) experiments, this deployment introduces scaling for larger datasets. When using out-of-time validation as the partitioning method, DataRobot no longer needs to downsample for datasets as large as 10GB. Instead, a multistage Autopilot process supports the greatly expanded input allowance.

Preview¶

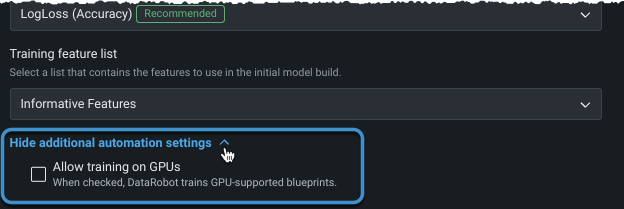

GPU support now available in Workbench¶

In additional automation settings, you can now enable GPU workers for Workbench experiments that include text and/or images and require deep learning models. Training on GPUs reduces training time. DataRobot detects blueprints that contain certain tasks and, when detected, includes GPU-supported blueprints both in Autopilot and in the blueprint repository.

Preview documentation.

Feature flag OFF by default: Enable GPU Workers

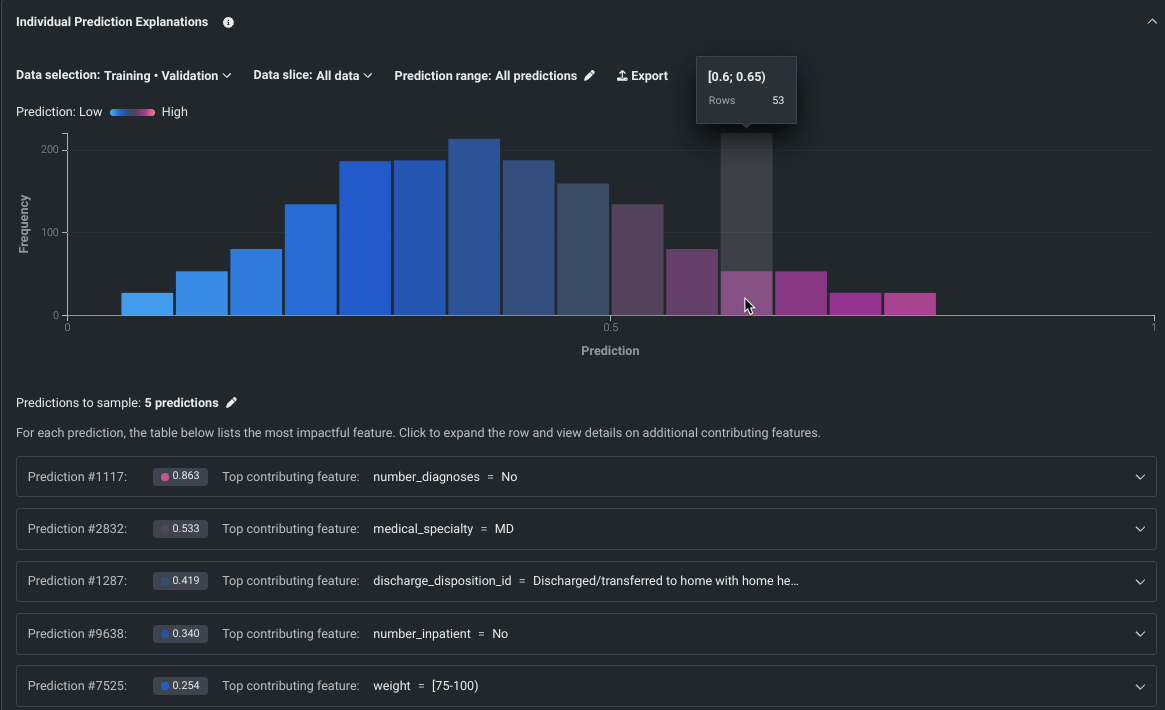

SHAP-based Individual Prediction Explanations in Workbench¶

SHAP-based explanations help to understand what drives predictions on a row-by-row basis by providing an estimation of how much each feature contributes to a given prediction differing from the average. With their introduction to Workbench, SHAP explanations are available for all model types; XEMP-based explanations are not available in Use Case experiments. Use the controls in the insight to set the data partition, apply slices, and set a prediction range.

Note

To better communicate this feature’s functionality as a local explanation method that calculates SHAP values for each individual row, we have updated the name from SHAP Prediction Explanations to Individual Prediction Explanations.
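To make "how much each feature contributes to a given prediction differing from the average" concrete, consider the simplest case: a linear model with independent features, where SHAP values have the closed form w_i * (x_i - mean_i). The sketch below uses that special case only; it is not how DataRobot computes explanations for arbitrary model types, and all names and numbers are made up for illustration.

```python
def predict(bias, weights, row):
    """Prediction of a linear model: bias + sum of weight * feature value."""
    return bias + sum(w * x for w, x in zip(weights, row))


def linear_shap_values(weights, feature_means, row):
    """Per-feature contributions for a linear model with independent features."""
    return [w * (x - m) for w, x, m in zip(weights, row, feature_means)]


bias = 0.5
weights = [2.0, -1.0]
feature_means = [1.0, 3.0]   # average feature values in the training data
row = [2.0, 1.0]             # the individual row being explained

contributions = linear_shap_values(weights, feature_means, row)
difference_from_average = predict(bias, weights, row) - predict(bias, weights, feature_means)
```

The contributions sum to the difference between this row's prediction and the prediction at the average feature values, which is the additivity property that makes SHAP values readable on a row-by-row basis.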

Preview documentation.

Feature flag ON by default: Universal SHAP in NextGen

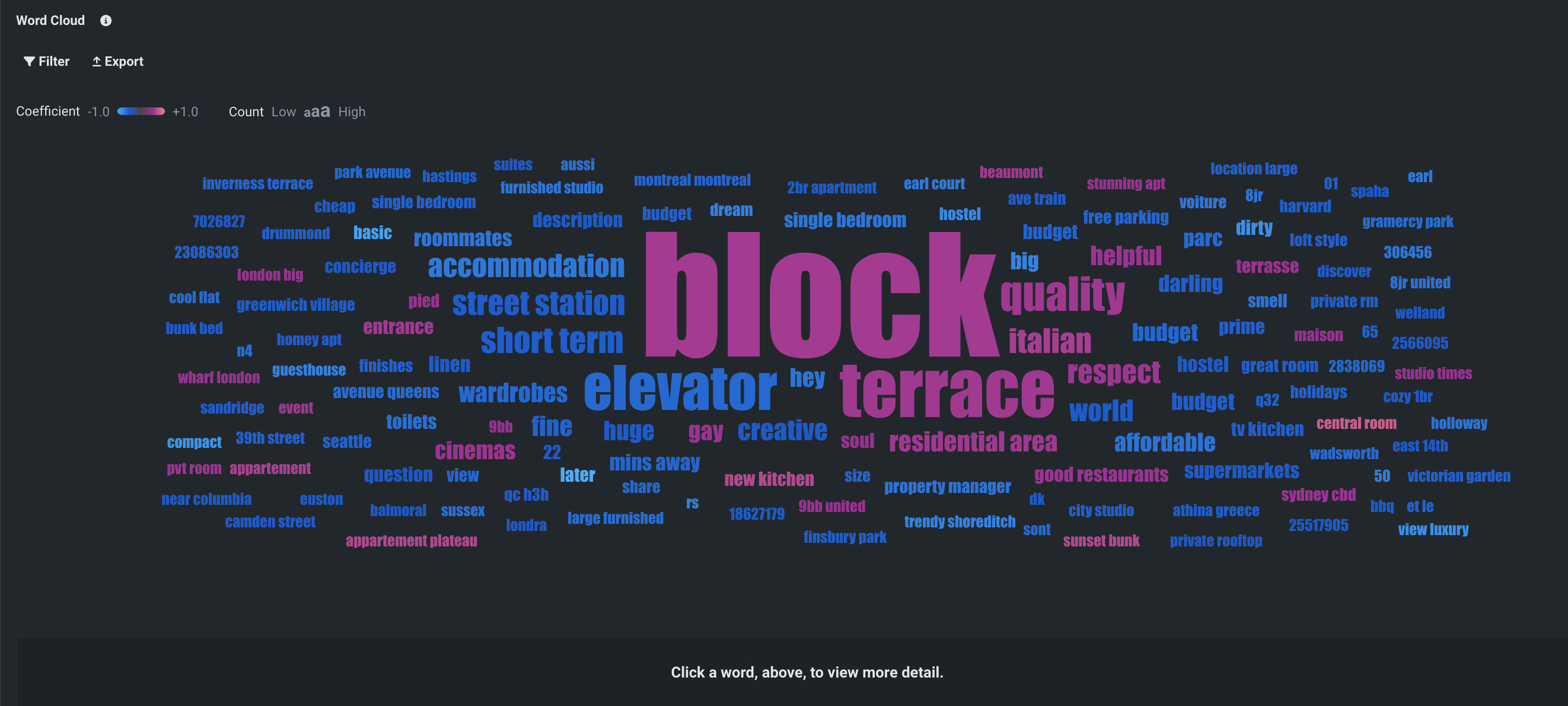

Word Cloud now available in Workbench¶

Word Cloud, a text-based insight for classification and regression projects, is now available as preview in Workbench. It displays up to 200 of the most impactful words and short phrases, helping to understand the correlation of a word to the target. When viewing the Word Cloud, you can view individual word details, filter the display, and export the insight.

Preview documentation.

Feature flag ON by default: Word Cloud in Workbench

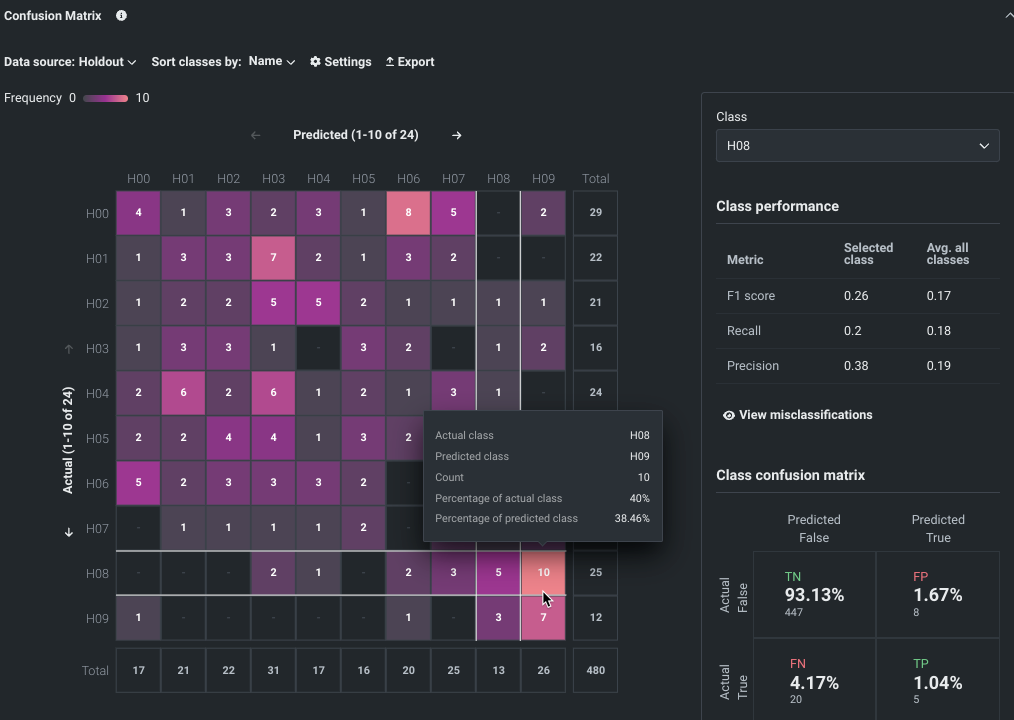

Multiclass experiments now available in Workbench¶

Available as a preview feature, you can now create multiclass experiments in Workbench. DataRobot determines the experiment type based on the number of values for a given target feature. If more than two, the experiment is handled as either multiclass or regression (for numeric targets). Special handling by DataRobot allows:

- Changing a regression experiment to multiclass.

- Using aggregation, with configurable settings, to support more than 1000 classes.

Once a model is built, a multiclass confusion matrix helps to visualize where the model is, perhaps, mislabeling one class as another.
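One common way to aggregate a very high-cardinality target is to keep the most frequent classes and fold the remainder into a single catch-all class. The sketch below shows that idea under stated assumptions: the function name, the `max_classes` parameter, and the `__other__` label are hypothetical, and the configurable settings DataRobot exposes for aggregation are not described in these notes.

```python
from collections import Counter


def aggregate_rare_classes(labels, max_classes, other_label="__other__"):
    """Keep the most frequent classes and merge the rest into `other_label`.

    The result has at most `max_classes` distinct values, including the
    catch-all class when aggregation is applied.
    """
    counts = Counter(labels)
    if len(counts) <= max_classes:
        return list(labels)  # nothing to aggregate
    keep = {label for label, _ in counts.most_common(max_classes - 1)}
    return [label if label in keep else other_label for label in labels]


target = ["a"] * 5 + ["b"] * 3 + ["c"] + ["d"]
aggregated = aggregate_rare_classes(target, max_classes=3)
```

With four original classes and a limit of three, the two rarest classes are merged, leaving `a`, `b`, and the catch-all class.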

Preview documentation.

Feature flag ON by default: Unlimited multiclass

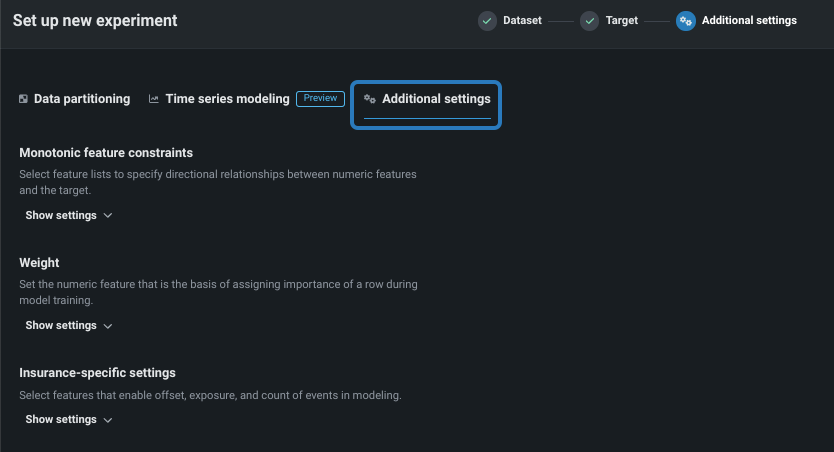

New configuration options added to Workbench¶

This deployment adds several Public Preview settings during experiment setup, covering many common cases for users. New settings include the ability to:

- Change the modeling mode. Previously, Workbench ran quick Autopilot only; now you can set the mode to manual mode (for building via the blueprint repository) or Comprehensive mode (not available for time-aware experiments).

- Change the optimization metric—the metric that defines how DataRobot scores your models—from the metric selected by DataRobot to any supported metric appropriate for your experiment.

- Configure additional settings, such as offset/weight/exposure, monotonic feature constraints, and positive class selection.

Preview documentation.

Feature flag ON by default: UXR Advanced Modeling Options

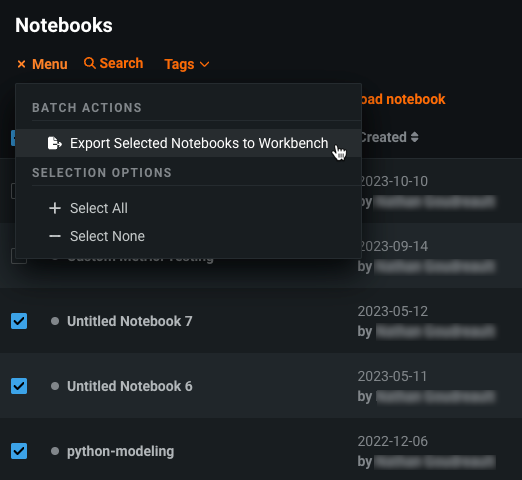

Migrate projects and notebooks from DataRobot Classic to NextGen¶

Now available for preview, DataRobot allows you to transfer projects and notebooks created in DataRobot Classic to DataRobot NextGen. You can export projects in DataRobot Classic and add them to a Use Case in Workbench as an experiment. Notebooks can also be exported from Classic and added to a Use Case in Workbench.

Preview documentation.

Feature flag OFF by default: Enable Asset Migration

Compute Prediction Explanations for data in OTV and time series projects¶

Now available for preview, you can compute Prediction Explanations for time series and OTV projects. Specifically, you can get XEMP Prediction Explanations for the holdout partition and sections of the training data. DataRobot only computes Prediction Explanations for the validation partition of backtest one in the training data.

Preview documentation.

Feature flag OFF by default: Enable Prediction Explanations on training data for time-aware Projects

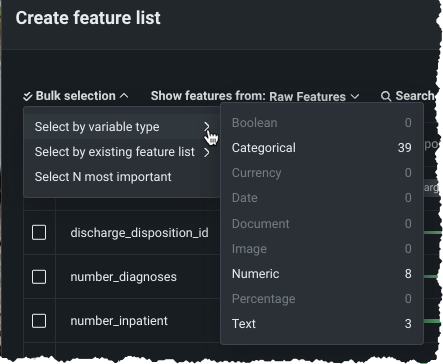

Create custom feature lists in existing experiments¶

In Workbench, you can now add new, custom feature lists to an existing predictive or forecasting experiment through the UI. On data ingest, DataRobot automatically creates several feature lists, which control the subset of features that DataRobot uses to build models and make predictions. Now, you can create your own lists from the Feature Lists or Data tabs in the Experiment information window accessed from the Leaderboard. Use bulk selections to choose multiple features with a single click.

Preview documentation.

Feature flags ON by default: Enable Data and Feature Lists tabs in Workbench, Enable Feature Lists in Workbench Preview, Enable Workbench Feature List Creation

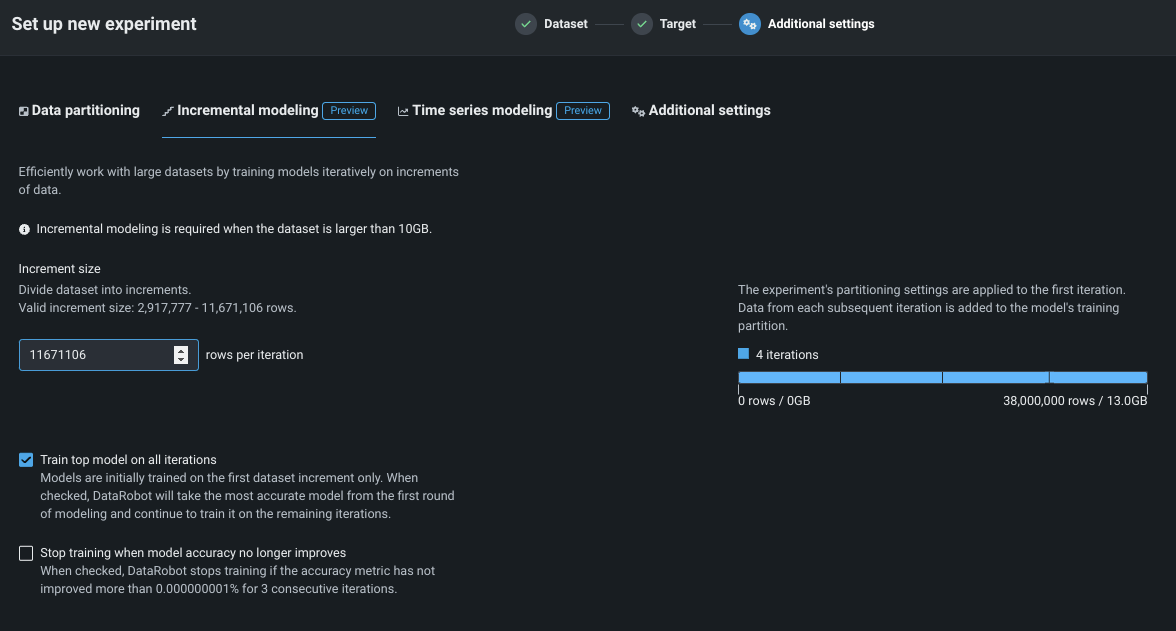

Scalability up to 100GB now available with incremental learning¶

Available as a preview feature, you can now train models on up to 100GB of data using incremental learning (IL) for binary and regression project types. IL is a model training method specifically tailored for large datasets that chunks data and creates training iterations. Configuration options allow you to control whether a top model trains on one—or all—iterations and whether training stops if accuracy improvements plateau. After model building begins, you can compare trained iterations and optionally assign a different active version or continue training. The active iteration is the basis for other insights and is used for making predictions.
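The chunk-and-iterate pattern described above can be sketched generically. This is a minimal illustration, not DataRobot's training loop: `train_incrementally`, its `update` and `score` callables, and the `min_improvement` threshold are all hypothetical names introduced for the example. Each chunk produces one training iteration, and training optionally stops once the score no longer improves.

```python
def chunked(rows, chunk_size):
    """Yield consecutive slices of `rows` with at most `chunk_size` items."""
    for start in range(0, len(rows), chunk_size):
        yield rows[start:start + chunk_size]


def train_incrementally(rows, chunk_size, update, score,
                        min_improvement=0.0, stop_on_plateau=True):
    """Run one training iteration per chunk; higher scores are better.

    `update(state, chunk)` returns the new model state and `score(state)`
    evaluates it. Stops early when the gain over the previous iteration is
    at most `min_improvement`, if `stop_on_plateau` is set.
    """
    state = None
    history = []
    for chunk in chunked(rows, chunk_size):
        state = update(state, chunk)
        history.append(score(state))
        plateaued = len(history) >= 2 and history[-1] - history[-2] <= min_improvement
        if stop_on_plateau and plateaued:
            break
    return state, history


# Toy stand-ins: the "model" is a row count and the score saturates at 6.
def count_rows(state, chunk):
    return (state or 0) + len(chunk)


def saturating_score(state):
    return min(state, 6)


data = list(range(10))
_, early_stopped = train_incrementally(data, 2, count_rows, saturating_score)
_, all_iterations = train_incrementally(data, 2, count_rows, saturating_score,
                                        stop_on_plateau=False)
```

Keeping the per-iteration score history is what makes it possible to compare iterations afterward and pick a different one as the active version.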

Preview documentation.

Feature flags OFF by default: Enable Incremental Learning, Enable Data Chunking

Apps¶

See Chat generation Q&A application in the MLOps enhancements.

Admin enhancements¶

GA¶

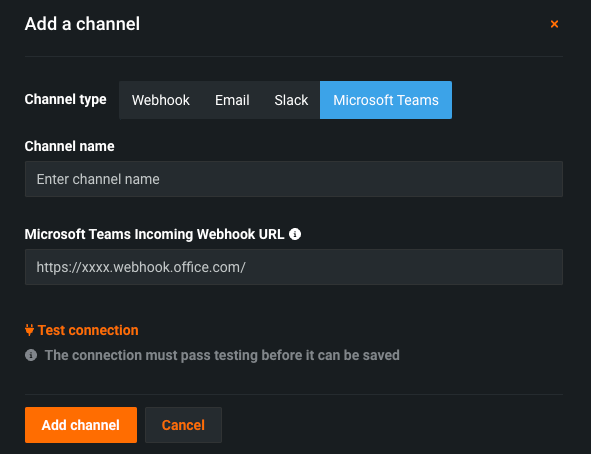

Add a Microsoft Teams notification channel¶

Admins can now configure a notification channel for Microsoft Teams. Notification channels are mechanisms for delivering notifications created by admins. You may want to set up several channels for each type of notification; for example, a webhook with a URL for deployment-related events, and a webhook for all project-related events.

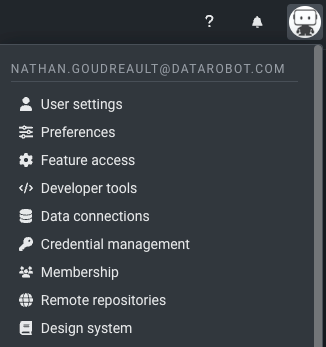

Updated user settings interface¶

As part of DataRobot NextGen, DataRobot has updated the interface for user settings, including data connection and developer tools pages. Some settings have been renamed and the configuration pages have been repainted. To view the updated settings, select your user icon and browse the settings in the menu.

Single-tenant SaaS¶

DataRobot has released a single-tenant SaaS solution, featuring a public internet access networking option. With this enhancement, you can seamlessly leverage DataRobot's AI capabilities while securely connecting across the web, unlocking unprecedented flexibility and scalability.
