Databricks¶

The Databricks connector allows you to access data in Databricks on Azure or AWS.

Supported authentication¶

- Personal access token

- Service principal

Prerequisites¶

In addition to either a personal access token or a service principal for authentication, you need the following before connecting to Databricks in DataRobot:

For Azure:

- A Databricks workspace in the Azure Portal app

- Data stored in an Azure Databricks database

For AWS:

- A Databricks workspace in AWS

- Data stored in an AWS Databricks database

Generate a personal access token¶

In the Azure Portal app, generate a personal access token for your Databricks workspace. This token will be used to authenticate your connection to Databricks in DataRobot.

See the Azure Databricks documentation.

In AWS, generate a personal access token for your Databricks workspace. This token will be used to authenticate your connection to Databricks in DataRobot.

See the Databricks on AWS documentation.
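Before you enter a new personal access token in DataRobot, you can confirm that it works by calling a read-only Databricks REST endpoint and sending the token as a Bearer credential. The sketch below only builds the request with Python's standard library. The hostname and token are placeholders, and the choice of the list-SQL-warehouses endpoint (`/api/2.0/sql/warehouses`) as the test call is our assumption, not a DataRobot requirement.

```python
import urllib.request


def build_token_check_request(server_hostname: str, access_token: str) -> urllib.request.Request:
    """Build (but do not send) a GET request that lists SQL warehouses.

    Databricks REST APIs accept a personal access token in an
    'Authorization: Bearer <token>' header. The endpoint is only an
    assumed example of a cheap, read-only call for testing a token.
    """
    # Accept a hostname pasted with or without 'https://' and a trailing slash.
    host = server_hostname.removeprefix("https://").rstrip("/")
    return urllib.request.Request(
        url=f"https://{host}/api/2.0/sql/warehouses",
        headers={"Authorization": f"Bearer {access_token}"},
        method="GET",
    )
```

To send the request, pass it to `urllib.request.urlopen`. A successful response indicates that Databricks accepted the token.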

Create a service principal¶

In the Azure Portal app, create a service principal for your Databricks workspace. DataRobot uses the resulting client ID and client secret to authenticate your connection to Databricks.

See the Azure Databricks documentation. In the linked instructions, copy the following information:

- Application ID: Enter this value in the client ID field when setting up the connection in DataRobot.

- OAuth secrets: Enter the secret in the client secret field when setting up the connection in DataRobot.

Make sure the service principal has permission to access the data you want to use.

In AWS, create a service principal for your Databricks workspace. DataRobot uses the resulting client ID and client secret to authenticate your connection to Databricks.

See the Databricks on AWS documentation.

Make sure the service principal has permission to access the data you want to use.
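DataRobot handles authentication for you, but it can help to know what the client ID and client secret are used for. In Databricks' OAuth machine-to-machine (M2M) flow, a service principal exchanges its client ID and OAuth secret for a short-lived access token at the workspace's `/oidc/v1/token` endpoint. The sketch below builds that token request with the standard library, assuming a Databricks-managed OAuth secret. All values are placeholders, and whether DataRobot performs exactly this exchange internally is an assumption.

```python
import base64
import urllib.parse
import urllib.request


def build_m2m_token_request(server_hostname: str, client_id: str, client_secret: str) -> urllib.request.Request:
    """Build (but do not send) an OAuth client-credentials token request."""
    host = server_hostname.removeprefix("https://").rstrip("/")
    # HTTP Basic authentication: base64("<client_id>:<client_secret>").
    basic = base64.b64encode(f"{client_id}:{client_secret}".encode()).decode()
    # Form-encoded body for the client-credentials grant; 'all-apis' is the Databricks scope.
    body = urllib.parse.urlencode({"grant_type": "client_credentials", "scope": "all-apis"}).encode()
    return urllib.request.Request(
        url=f"https://{host}/oidc/v1/token",
        data=body,
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )
```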

Set up a connection in DataRobot¶

To connect to Databricks in DataRobot (note that this example uses Azure with an access token):

- Open Workbench and select a Use Case.

- Follow the instructions for connecting to a data source.

-

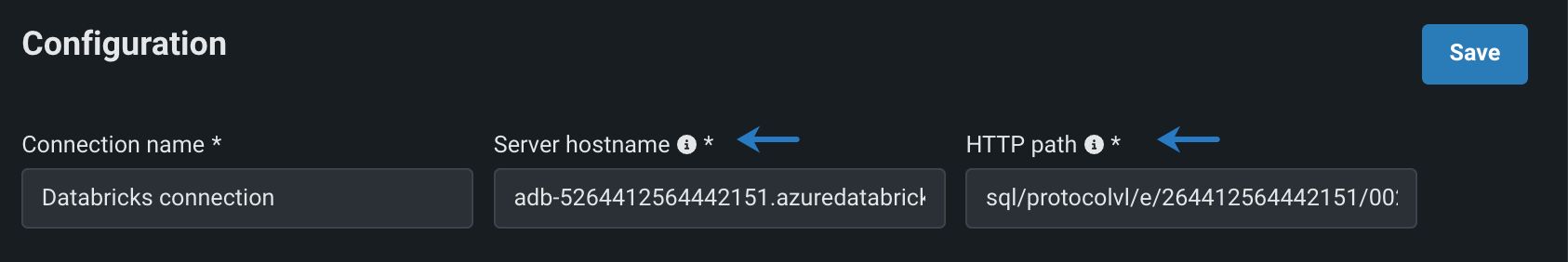

With the information retrieved in the previous section, fill in the required configuration parameters.

-

Under Authentication, click New credentials. Then, enter your access token and a unique display name. If you've previously added credentials for this data source, you can select it from your saved credentials.

If you selected service principal as the authentication method, enter the client ID, client secret, and a unique display name.

-

Click Save.

Required parameters¶

The tables below list the minimum required fields to establish a connection with Databricks.

Azure:

| Required field | Description | Documentation |
|---|---|---|
| Server Hostname | The hostname of the Databricks server to connect to, as listed in the connection details of your SQL warehouse or cluster. | Azure Databricks documentation |
| HTTP Path | The HTTP path of the compute resource (SQL warehouse or cluster). | Azure Databricks documentation |

AWS:

| Required field | Description | Documentation |
|---|---|---|
| Server Hostname | The hostname of the Databricks server to connect to, as listed in the connection details of your SQL warehouse or cluster. | Databricks on AWS documentation |
| HTTP Path | The HTTP path of the compute resource (SQL warehouse or cluster). | Databricks on AWS documentation |
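A common setup mistake is pasting a full URL into the Server Hostname or HTTP Path field. The sketch below normalizes and sanity-checks both values before you enter them. The function name and its checks are hypothetical and are not DataRobot's own validation rules.

```python
from urllib.parse import urlparse


def check_connection_fields(server_hostname: str, http_path: str) -> tuple[str, str]:
    """Return (hostname, http_path) cleaned up, or raise ValueError."""
    host = server_hostname.strip()
    if "://" in host:
        # Drop the scheme, port, and any path pasted along with the hostname.
        host = urlparse(host).hostname or ""
    host = host.rstrip("/")
    if not host or "/" in host:
        raise ValueError(f"Server Hostname should be a bare hostname, got {server_hostname!r}")

    path = http_path.strip()
    # HTTP Path is only the path part (for example /sql/1.0/warehouses/<id>), not a full URL.
    if not path or "://" in path:
        raise ValueError(f"HTTP Path should be a path, not a URL, got {http_path!r}")
    return host, path
```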

The HTTP Path can point to either a SQL warehouse or a cluster. SQL warehouses are dedicated to executing SQL, so they have less overhead than clusters and often perform better. Use a SQL warehouse if possible.
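You can usually tell from an HTTP Path which kind of compute it points to. In Databricks connection details, SQL warehouse paths look like `/sql/1.0/warehouses/<id>` (older SQL endpoints used `/sql/1.0/endpoints/<id>`), while cluster paths look like `sql/protocolv1/o/<org-id>/<cluster-id>`. The hypothetical helper below classifies a path on that basis. It is a heuristic based on those path shapes, not an official API.

```python
import re

# Path shapes as shown in Databricks connection details (assumed stable).
_WAREHOUSE = re.compile(r"/?sql/1\.0/(?:warehouses|endpoints)/[^/]+")
_CLUSTER = re.compile(r"/?sql/protocolv1/o/[^/]+/[^/]+")


def compute_type(http_path: str) -> str:
    """Return 'sql_warehouse', 'cluster', or 'unknown' for an HTTP Path."""
    path = http_path.strip()
    if _WAREHOUSE.fullmatch(path):
        return "sql_warehouse"
    if _CLUSTER.fullmatch(path):
        return "cluster"
    return "unknown"
```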

Note

If the catalog parameter is specified in the connection configuration, Workbench lists only the schemas in that catalog. If it is not specified, Workbench lists all catalogs you have access to.
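The catalog setting mirrors two Databricks SQL statements you can run yourself to preview what Workbench will list: `SHOW SCHEMAS IN <catalog>` when a catalog is set, and `SHOW CATALOGS` when it is not. This sketch picks the statement from the configured catalog and quotes the name with backticks. It only illustrates the behavior described above; it is not how Workbench is implemented, and the function names are hypothetical.

```python
from typing import Optional


def quote_identifier(name: str) -> str:
    # Databricks SQL quotes identifiers with backticks; a literal backtick is doubled.
    return "`" + name.replace("`", "``") + "`"


def browse_statement(catalog: Optional[str]) -> str:
    """Return the SQL that lists what a connection with this catalog setting shows."""
    if catalog:
        # Catalog configured: only the schemas inside it.
        return f"SHOW SCHEMAS IN {quote_identifier(catalog)}"
    # No catalog configured: every catalog the user can access.
    return "SHOW CATALOGS"
```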

Troubleshooting¶

| Problem | Solution | Instructions |
|---|---|---|
| When attempting to execute an operation in DataRobot, the firewall requests that you clear the IP address each time. | Add all allowed IPs for DataRobot. | See Allowed source IP addresses. If you've already added the allowed IPs, check the existing IPs for completeness. |
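When checking an existing allowlist for completeness, it is easy to miss an address that falls outside every configured range. The sketch below uses Python's `ipaddress` module to report which required addresses are not covered by any allowlist entry. The function name is hypothetical, and the addresses in the tests are placeholders from documentation-reserved ranges; take the real values from the Allowed source IP addresses page.

```python
import ipaddress


def missing_ips(required: list[str], allowlist: list[str]) -> list[str]:
    """Return the required addresses not covered by any allowlist entry.

    Allowlist entries may be single addresses or CIDR ranges.
    """
    # strict=False accepts entries like '192.0.2.1' (treated as /32) or host bits set in a range.
    networks = [ipaddress.ip_network(entry, strict=False) for entry in allowlist]
    missing = []
    for ip in required:
        addr = ipaddress.ip_address(ip)
        # Membership is always False across IPv4/IPv6, so mixed lists are safe.
        if not any(addr in net for net in networks):
            missing.append(ip)
    return missing
```

For example, `missing_ips(["203.0.113.10", "198.51.100.7"], ["203.0.113.0/28"])` returns `["198.51.100.7"]`.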

Feature considerations¶

Predictions are not available through the native Databricks connector. To make predictions, connect to Databricks using the JDBC driver.
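For a JDBC connection, the Server Hostname and HTTP Path values go into the JDBC URL. The sketch below assembles a URL in the format documented for the Databricks JDBC driver with personal access token authentication (`AuthMech=3`, `UID=token`, and the token as `PWD`). The exact property set can vary between driver versions, so treat this format as an assumption to check against the driver documentation. The function name is hypothetical, and the hostname and path in the tests are placeholders.

```python
from typing import Optional


def databricks_jdbc_url(server_hostname: str, http_path: str, access_token: Optional[str] = None) -> str:
    """Assemble a Databricks JDBC URL for personal access token authentication."""
    props = [
        "transportMode=http",
        "ssl=1",
        f"httpPath={http_path}",
        "AuthMech=3",  # user/password mechanism; Databricks expects UID=token
        "UID=token",
    ]
    if access_token is not None:
        # Embedding the token puts a secret in the URL; avoid storing such URLs in plain text.
        props.append(f"PWD={access_token}")
    return f"jdbc:databricks://{server_hostname}:443/default;" + ";".join(props)
```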