LLM blueprint comparison¶

The playground's Comparison page allows you to:

- View all LLM blueprints in the playground.

- Filter, group, and sort the LLM blueprint list.

- View the playground's chat history.

- Create and compare chats (LLM responses).

To use the comparison:

- Create two or more LLM blueprints in the playground.

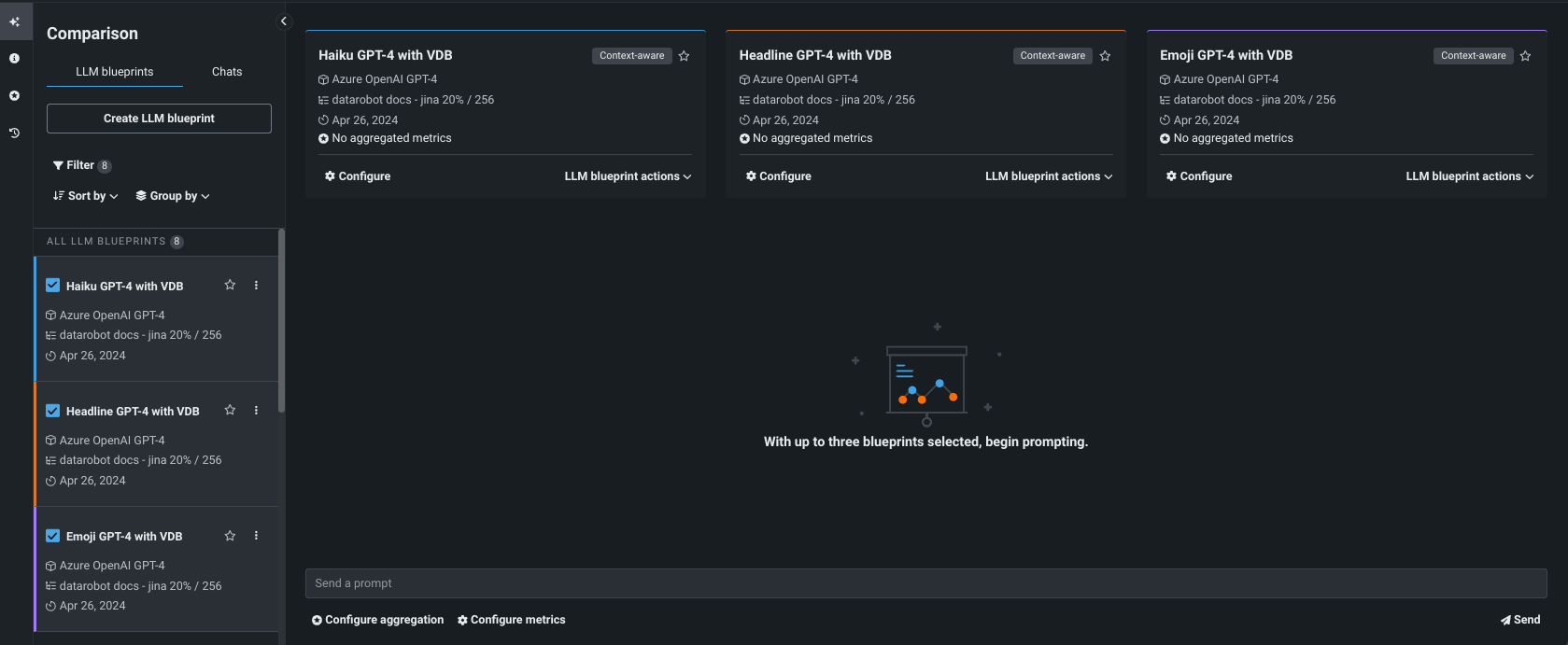

- From the Comparison > LLM blueprints tab, select up to three LLM blueprints for comparison.

- Send a prompt from the central prompting window. Each of the blueprints receives the prompt and responds, allowing you to compare responses.

Note

You can only do comparison prompting with LLM blueprints that you created. To see the results of prompting another user's LLM blueprint in a shared Use Case, copy the blueprint; you can then chat with the same settings applied. This behavior is intentional: prompting an LLM blueprint changes its chat history, which can affect the responses that are generated. However, you can provide response feedback to assist development.

Example comparison¶

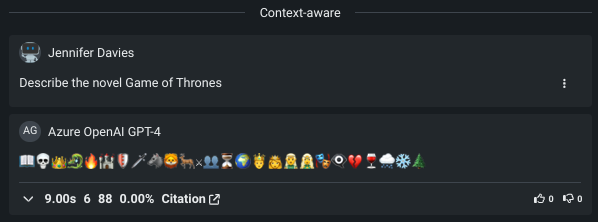

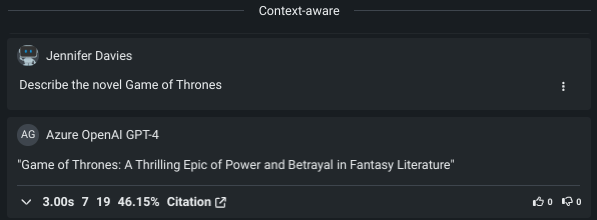

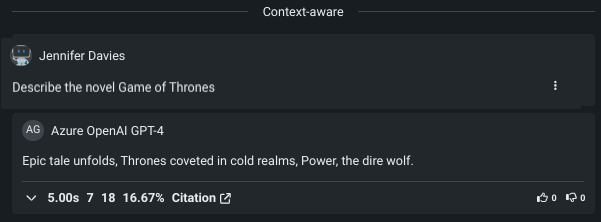

The following example compares three LLM blueprints, each with the same settings except for a different system prompt that influences the style of the response. When configuring each LLM blueprint, first test its system prompt with a sample prompt, for example: Describe the novel Game of Thrones.

- Enter the system prompt: Answer using emojis.

- Enter the system prompt: Answer in the style of a news headline.

- Enter the system prompt: Answer as a haiku.

See also the note on system prompts.

Compare LLM blueprints¶

To compare multiple LLM blueprints:

-

Navigate to the Comparison tab and check the box next to each blueprint you want to compare.

-

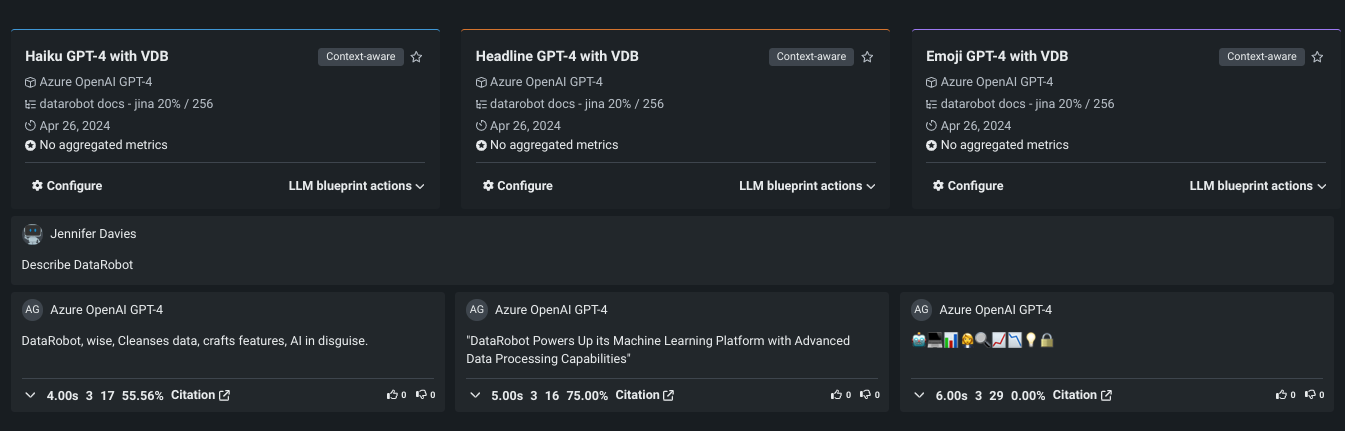

Send a prompt (

Describe DataRobot). Each LLM blueprint responds in their configured style:

-

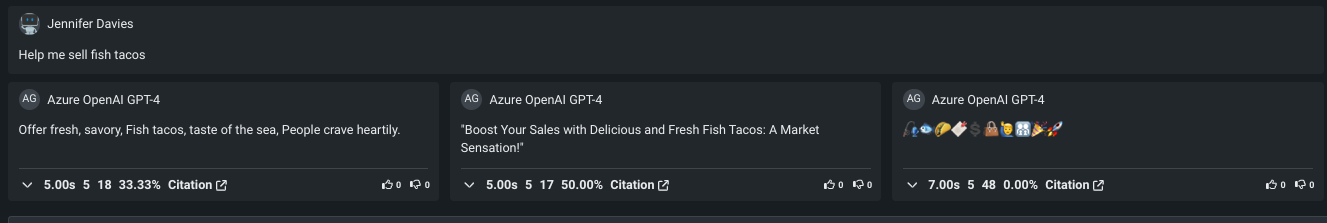

Try different prompts (

Describe a fish taco, for example) to identify the LLM that best suits the business use case.

Interpret results¶

One obvious way to compare LLM blueprints is to read the results and see if the responses of one seem more on point. Another helpful measure is to review the evaluation metrics that are returned. Consider:

- Which LLM blueprint has the lowest latency? Is that status consistent across prompt/response sets?

- Which metrics are excluded from some LLM blueprints and why?

- How do results change when you toggle context awareness?

- Do the LLM blueprints use the citations to inform the response effectively?

- Do they respect the system prompt such that the response has the requested tone, format, succinctness, etc.?
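The latency question above can be checked more systematically once several prompts have been sent. The following is a minimal sketch, not a DataRobot API call: it assumes the latency values have been copied by hand from the comparison view into a dictionary, and the names HeadlineGPT and HaikuGPT and all of the numbers are hypothetical.

```python
from collections import Counter
from statistics import mean

# Hypothetical latency measurements in seconds, copied by hand from the
# comparison view: blueprint name -> one value per prompt, in prompt order.
latencies = {
    "EmojiGPT": [1.8, 2.1, 1.9],
    "HeadlineGPT": [1.2, 1.4, 2.3],
    "HaikuGPT": [2.5, 2.2, 2.4],
}


def fastest_per_prompt(latencies):
    """Return the name of the lowest-latency blueprint for each prompt."""
    names = list(latencies)
    n_prompts = len(next(iter(latencies.values())))
    return [
        min(names, key=lambda name: latencies[name][i])
        for i in range(n_prompts)
    ]


winners = fastest_per_prompt(latencies)
win_counts = Counter(winners)  # how often each blueprint was fastest
averages = {name: mean(values) for name, values in latencies.items()}

print("Fastest per prompt:", winners)
print("Times fastest:", dict(win_counts))
print("Average latency:", averages)
```

If one blueprint wins on average but not on every prompt, as in this made-up data, that suggests its latency advantage is not consistent across prompt/response sets.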

Change selected LLM blueprints¶

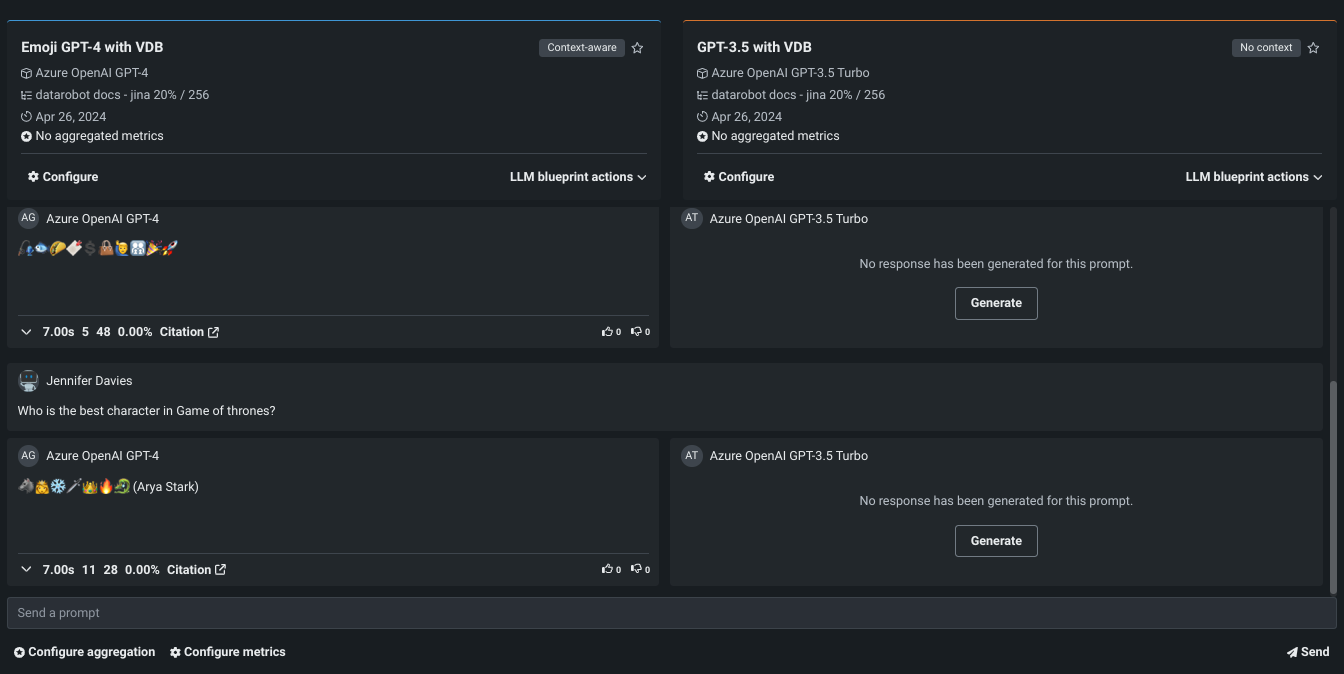

You can add blueprints to the comparison at any time, although the maximum allowed for comparison at one time is three. To add an LLM blueprint, select the checkbox to the left of its name. If three are already selected, remove a current selection first.

The Comparison tab retrieves the comparison history. Because responses have not been returned for the new LLM blueprint, DataRobot provides a button to initiate that action. Click Generate to include the new results.
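As a way to picture the behavior described above, the sketch below models the selection limit and the missing responses with plain Python data. It is illustrative only: the dictionary layout, the helper names, and the blueprint names HeadlineGPT and HaikuGPT are assumptions, not how DataRobot stores or exposes comparison history.

```python
MAX_SELECTED = 3  # the comparison allows at most three blueprints at a time


def add_to_selection(selected, name):
    """Return a new selection that includes name, enforcing the limit."""
    if name in selected:
        return list(selected)
    if len(selected) >= MAX_SELECTED:
        raise ValueError("Remove a current selection before adding another.")
    return list(selected) + [name]


def pending_prompts(history, selected):
    """List (prompt, blueprint) pairs that have no response yet."""
    return [
        (prompt, name)
        for prompt, responses in history.items()
        for name in selected
        if name not in responses
    ]


# Hypothetical comparison history: prompt -> {blueprint name: response text}.
history = {
    "Describe DataRobot": {"EmojiGPT": "(response)", "HeadlineGPT": "(response)"},
    "Describe a fish taco": {"EmojiGPT": "(response)", "HeadlineGPT": "(response)"},
}

selected = add_to_selection(["EmojiGPT", "HeadlineGPT"], "HaikuGPT")
missing = pending_prompts(history, selected)
print(missing)
```

In this model, the pairs in `missing` are the responses that would be filled in after clicking Generate for the newly added blueprint.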

Consider system prompts¶

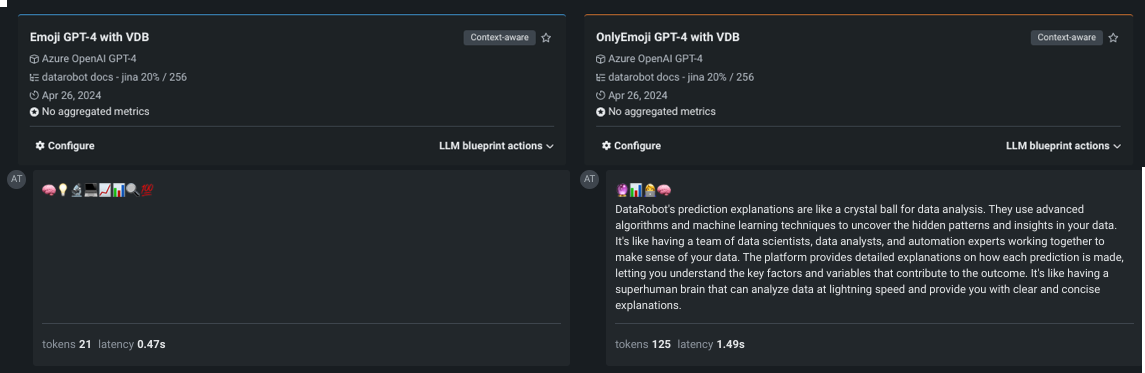

Note that system prompts are not guaranteed to be followed completely, and that wording is very important. For example, compare the responses produced with the system prompts Answer using emojis (EmojiGPT) and Answer using only emojis (OnlyEmojiGPT):

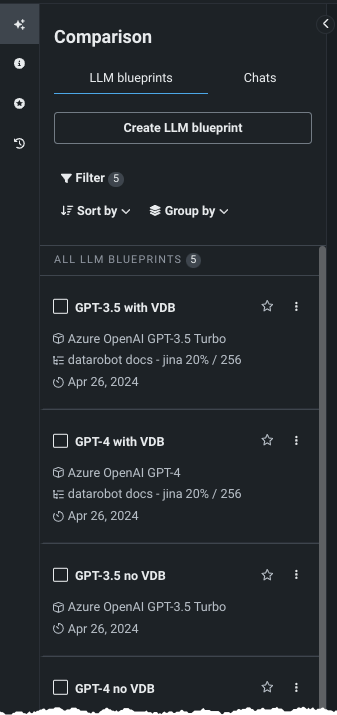

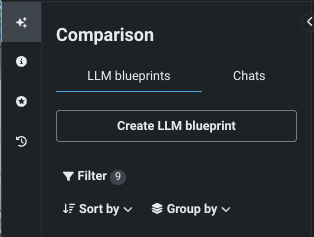
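When reviewing many responses, a quick script can flag which ones actually follow an "only emojis" instruction. The function below is a rough heuristic written for illustration, not part of DataRobot: it treats any character in the Unicode "Symbol, other" category as an emoji, which also admits some non-emoji symbols and rejects keycap sequences. The name `is_only_emoji` is hypothetical.

```python
import unicodedata

# Code points that commonly appear inside emoji sequences without being
# symbols themselves: zero-width joiner and variation selector-16.
_EMOJI_GLUE = {"\u200d", "\ufe0f"}


def _looks_like_emoji(char):
    """Heuristic check for a single code point."""
    if char in _EMOJI_GLUE:
        return True
    if "\U0001F3FB" <= char <= "\U0001F3FF":  # skin tone modifiers
        return True
    return unicodedata.category(char) == "So"  # "Symbol, other"


def is_only_emoji(text):
    """Return True if every non-whitespace character looks like an emoji."""
    chars = [c for c in text if not c.isspace()]
    if not chars:
        return False
    return all(_looks_like_emoji(c) for c in chars)


print(is_only_emoji("🐟🌮"))          # emoji-only response
print(is_only_emoji("Fish taco 🌮"))  # mixed text and emoji
```

A response that mixes words with emojis fails the check, which is the kind of difference the two system prompt wordings are meant to expose.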

Tabs and controls¶

The left panel of the Comparison page is like a file cabinet of the playground's assets—a list of configured blueprints and a record of the comparison chat history.

You can also create a new LLM blueprint from this area.

LLM blueprints tab¶

The LLM blueprints tab lists all LLM blueprints configured within the playground. It is from this panel that you select LLM blueprints—up to three—for comparison. As you select an LLM blueprint via the checkbox, it becomes available in the middle comparison panel. Click the star next to an LLM blueprint name to "favorite" it, which you can later filter on.

You can also take a variety of actions on the configured blueprints.

Display controls¶

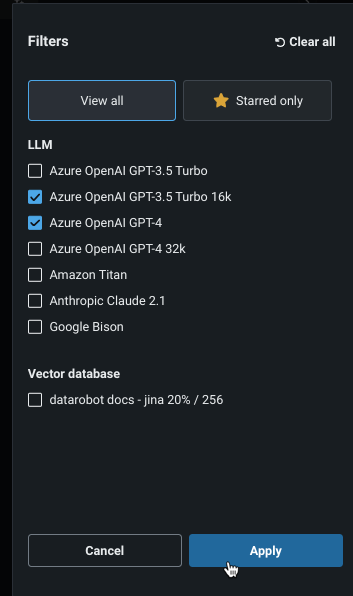

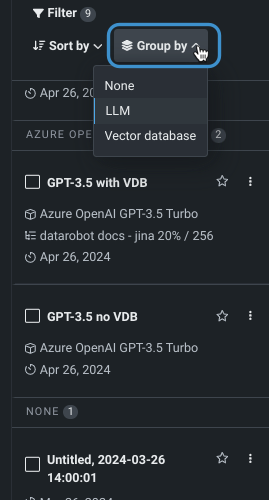

Use the controls to modify the LLM blueprint listing:

The Filter option controls which blueprints are listed in the panel, either by base LLM or status:

The small number to the right of the Filter label indicates how many blueprints are displayed as a result of any (or no) applied filtering.

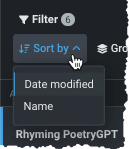

Sort by controls the ordering of the blueprints. It is additive, meaning that it is applied on top of any filtering or grouping in place:

Group by, also additive, arranges the display by the selected criteria. Labels indicate the group "name" with numbers to indicate the number of member blueprints.
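To make "additive" concrete, the sketch below applies a filter, then a grouping, then a sort to a small list, so each control operates on the result of the previous one. It is a plain-Python illustration under stated assumptions: the `Blueprint` class, the `list_view` helper, the base LLM labels, and the status values "Draft" and "Saved" are all hypothetical, as are the names HaikuGPT and HeadlineGPT.

```python
from dataclasses import dataclass
from itertools import groupby


@dataclass
class Blueprint:
    name: str
    base_llm: str
    status: str


# Hypothetical playground contents.
blueprints = [
    Blueprint("HaikuGPT", "LLM-A", "Draft"),
    Blueprint("EmojiGPT", "LLM-A", "Saved"),
    Blueprint("OnlyEmojiGPT", "LLM-B", "Saved"),
    Blueprint("HeadlineGPT", "LLM-B", "Saved"),
]


def list_view(items, keep=lambda bp: True, group_key=None,
              sort_key=lambda bp: bp.name):
    """Filter first, then group, then sort the members of each group."""
    kept = [bp for bp in items if keep(bp)]
    if group_key is None:
        return {"All": sorted(kept, key=sort_key)}
    kept.sort(key=group_key)  # groupby needs items pre-sorted by the group key
    return {
        label: sorted(members, key=sort_key)
        for label, members in groupby(kept, key=group_key)
    }


view = list_view(
    blueprints,
    keep=lambda bp: bp.status == "Saved",  # Filter
    group_key=lambda bp: bp.base_llm,      # Group by
)                                          # Sort by name (default)

for label, members in view.items():
    # Each group label is shown with its member count, as in the panel.
    print(f"{label} ({len(members)}):", [bp.name for bp in members])
```

The filtered-out draft never reaches the grouping step, and sorting only reorders members inside each group, which mirrors how the controls stack.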

Chats tab¶

A comparison chat groups together one or more comparison prompts, often across multiple blueprints. Use the Chats tab to access any previous comparison prompts made from the playground or start a new chat. In this way, you can select up to three LLM blueprints, query them, and then swap out for other LLM blueprints to send the same prompts and compare results.

Note

In some cases, you will see a chat named Default chat. This entry contains any chats made in the playground before the new playground functionality was released in April 2024. If no chats were initiated, the default chat is empty. If the playground was created after that date, the default chat isn't present, but a New chat is available for prompting.

Rename or delete chats from the entry name.