Deploy an LLM from the playground

From an LLM playground in a Use Case, you can add an LLM blueprint to the Registry to prepare the LLM for use in production. First, create a draft LLM blueprint and set its configuration, including the base LLM and, optionally, a system prompt and vector database. Then test and tune its responses. At that point, the blueprint is ready for registration and deployment. To deploy it, save the draft as an LLM blueprint and add it to the Registry's model workshop as a custom model with the Text Generation target type:

- In a Use Case, on the Playgrounds tab, click the playground containing the LLM you want to save as a blueprint.

-

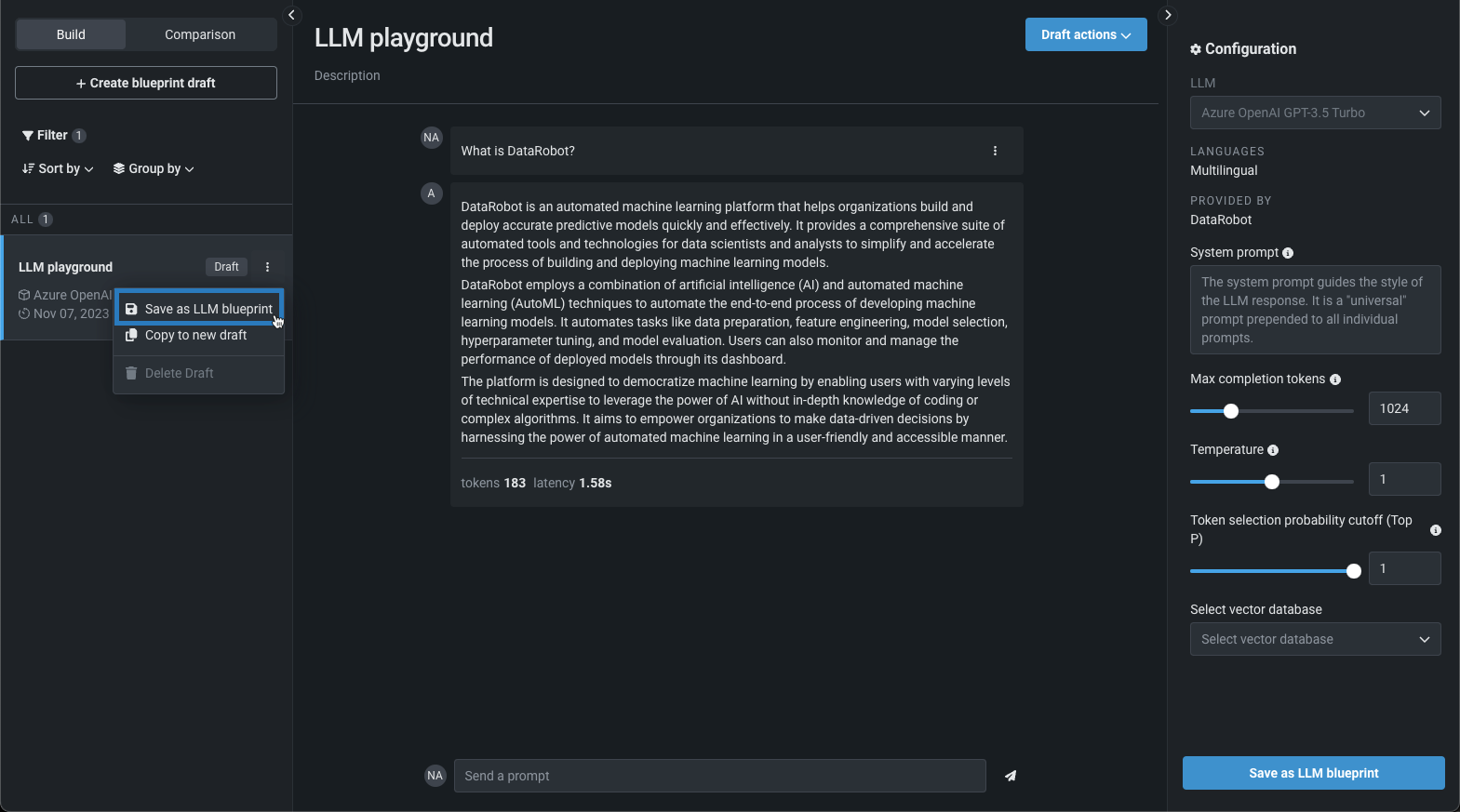

Click the blueprint draft to save, click the actions menu (), and then click Save as LLM blueprint. You can also click Save as LLM blueprint in Draft actions or in the Configuration sidebar.

The blueprint draft is now an LLM blueprint. Once saved, no further changes can be made to the blueprint.

-

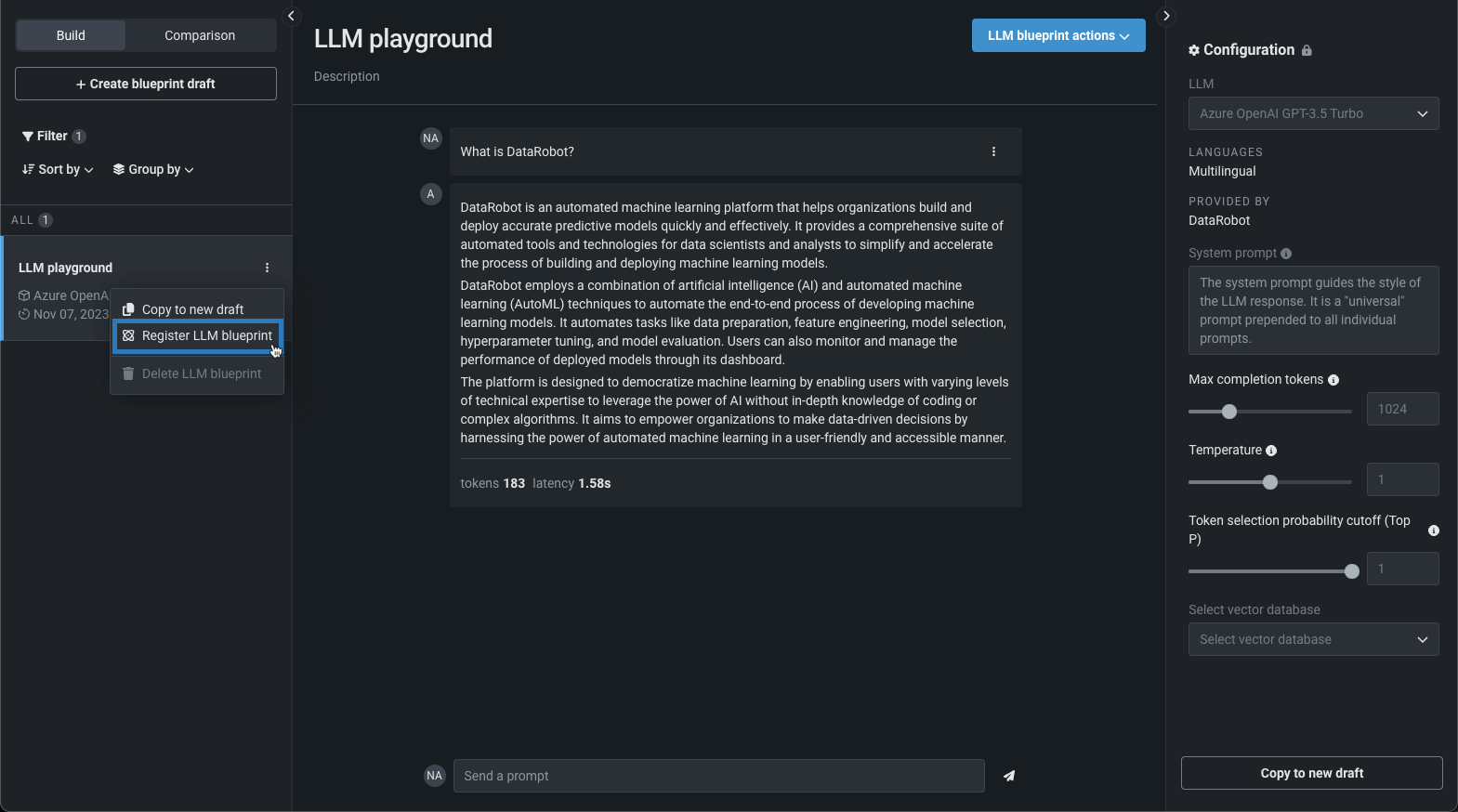

Click the actions menu (), and then click Register LLM blueprint. You can also click LLM blueprint actions > Register LLM blueprint.

-

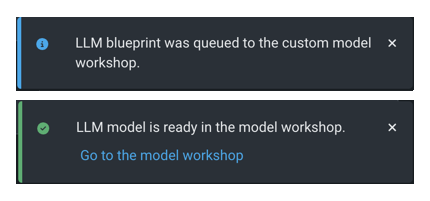

In the lower-left corner of the LLM playground, notifications appear as the LLM is queued and registered. When the registration notification appears, click Go to the LLM blueprint in Registry:

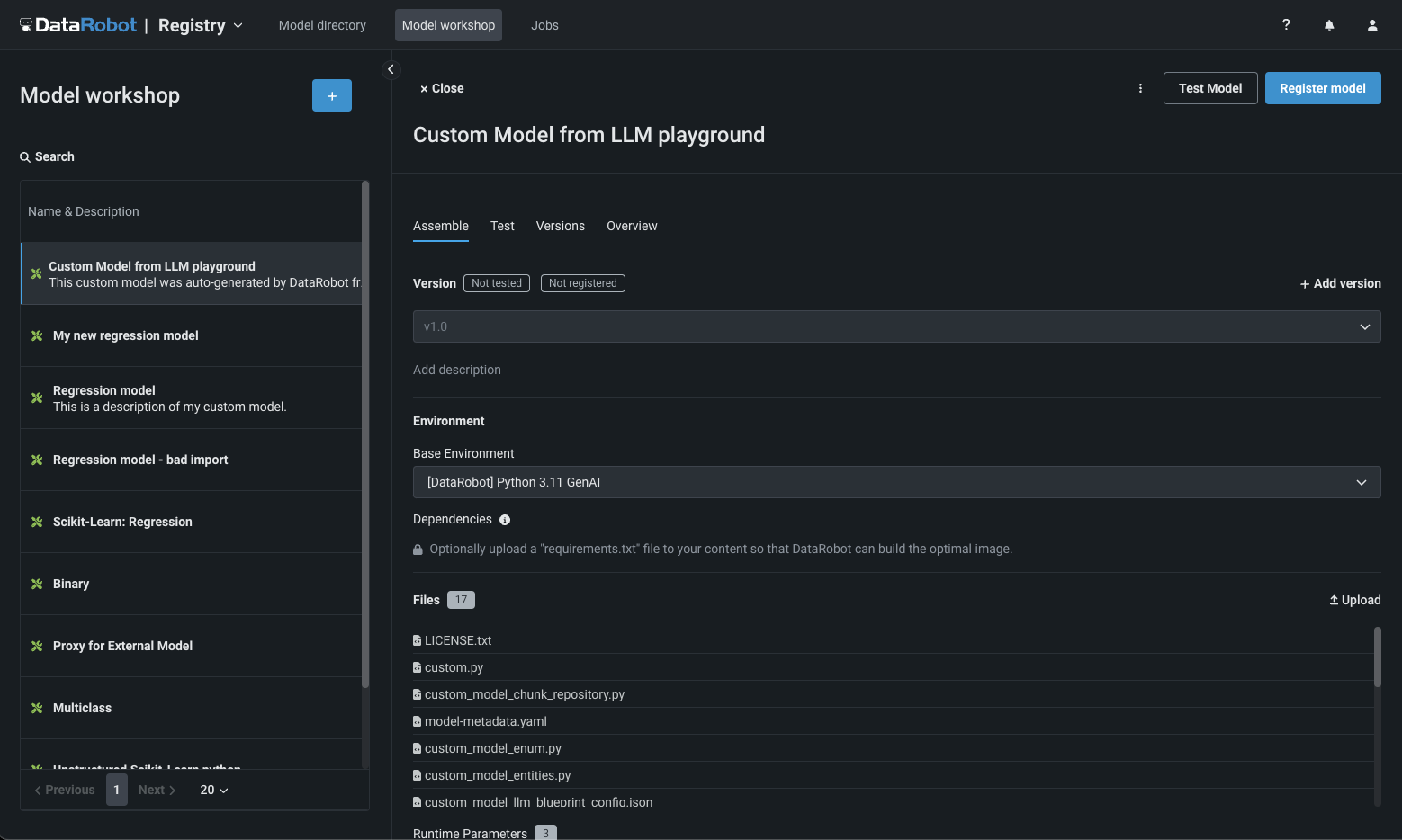

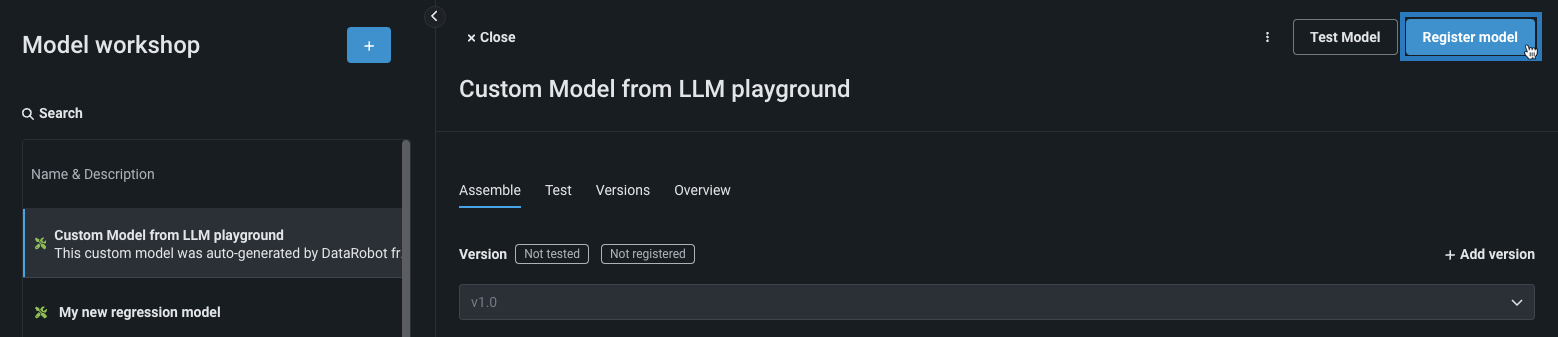

The LLM blueprint opens in the Registry's model workshop as a custom model with the Text Generation target type:

-

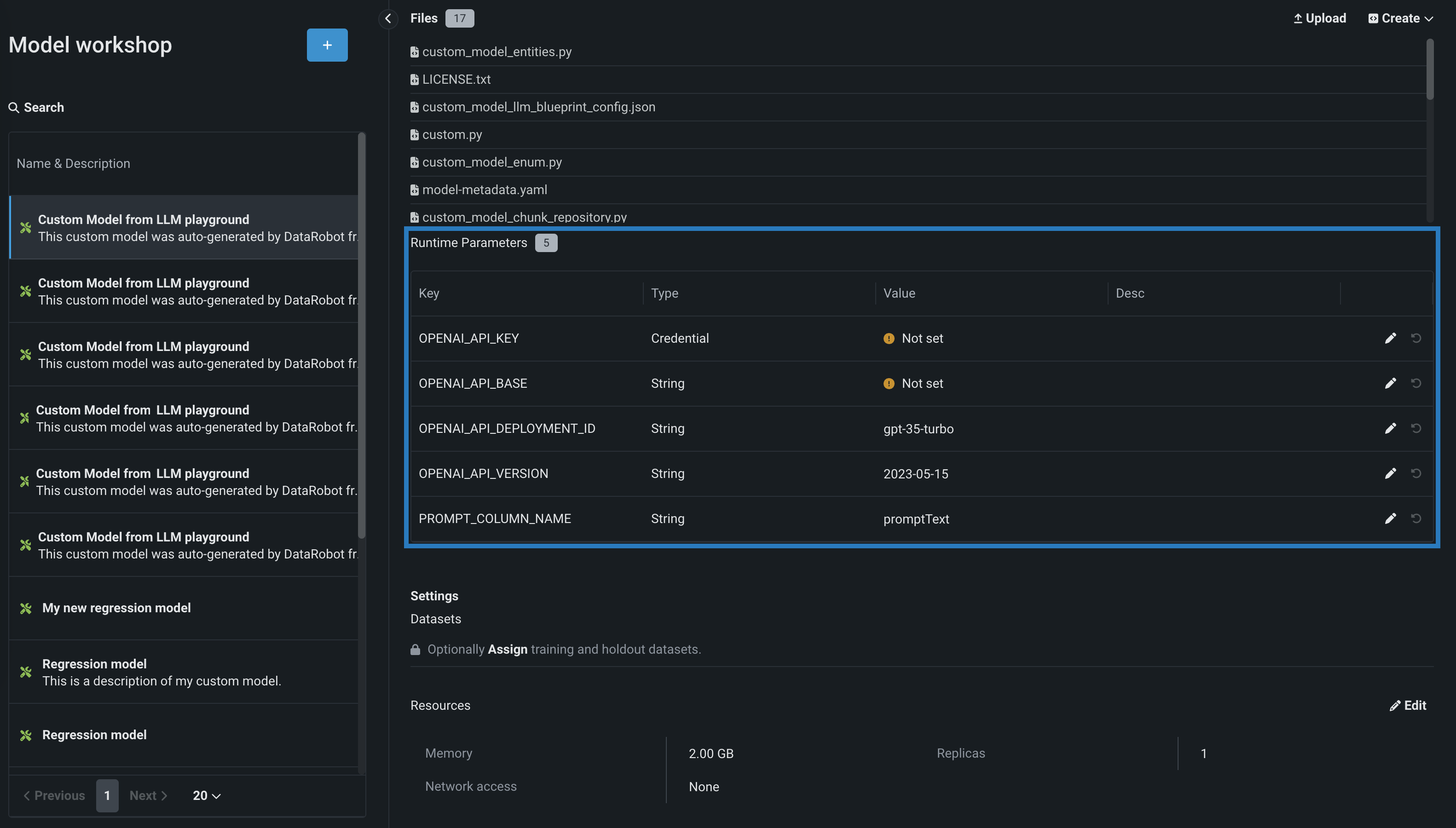

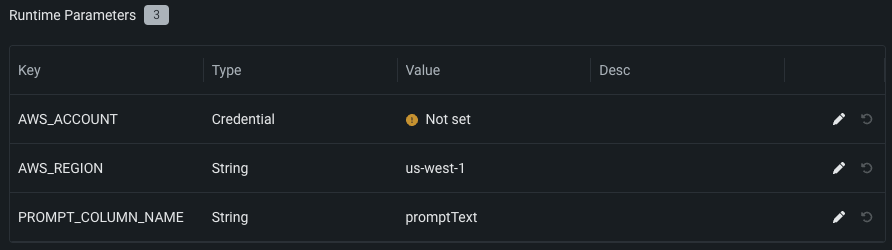

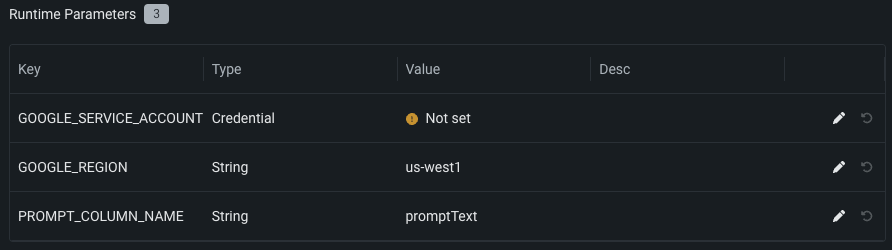

On the Assemble tab, in the Runtime Parameters section, configure the key-value pairs required by the LLM, including the LLM service's credentials and other details. To add these values, click the edit icon () next to the available runtime parameters.

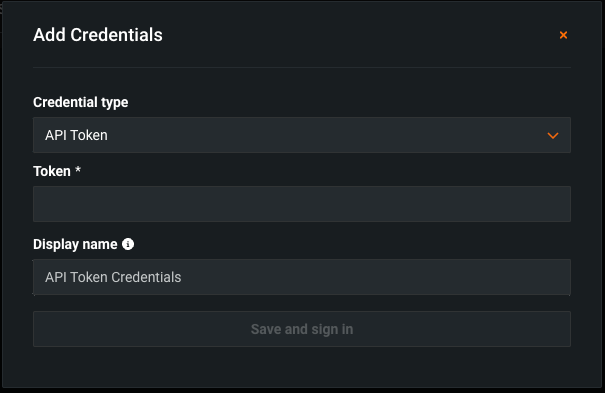

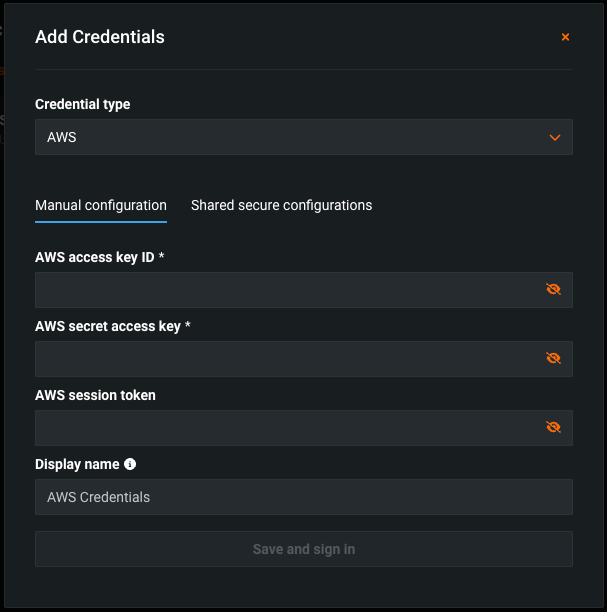

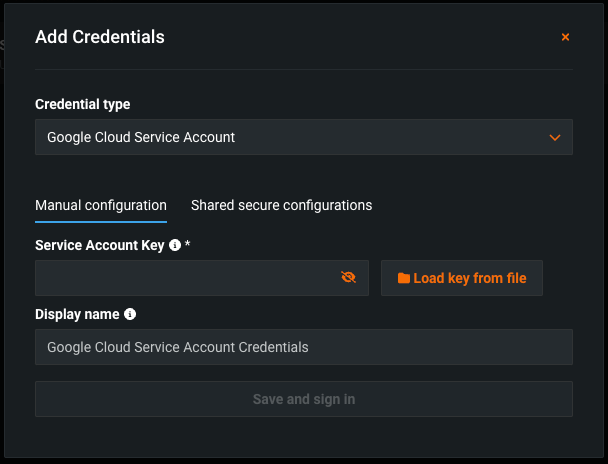

To configure Credential type Runtime Parameters, first, add the credentials required for the LLM you're deploying to the Credentials Management page of the DataRobot platform:

Microsoft-hosted LLMs: Azure OpenAI GPT-3.5 Turbo, Azure OpenAI GPT-3.5 Turbo 16k, Azure OpenAI GPT-4, and Azure OpenAI GPT-4 32k

Credential type: API Token (not Azure)

The required Runtime Parameters are:

| Key | Description |
|---|---|
| OPENAI_API_KEY | Select the API Token credential, created on the Credentials Management page, for the Azure OpenAI LLM API endpoint. |
| OPENAI_API_BASE | Enter the URL for the Azure OpenAI LLM API endpoint. |
| OPENAI_API_DEPLOYMENT_ID | Enter the name of the Azure OpenAI deployment of the LLM, chosen when deploying the LLM to your Azure environment. For more information, see the Azure OpenAI documentation on how to Deploy a model. The default deployment name suggested by DataRobot matches the ID of the LLM in Azure OpenAI (for example, gpt-35-turbo). Modify this parameter if your Azure OpenAI deployment is named differently. |
| OPENAI_API_VERSION | Enter the Azure OpenAI API version to use for this operation, following the YYYY-MM-DD or YYYY-MM-DD-preview format (for example, 2023-05-15). For more information on the supported versions, see the Azure OpenAI API reference documentation. |
| PROMPT_COLUMN_NAME | Enter the prompt column name from the input .csv file. The default column name is promptText. |

Amazon-hosted LLM: Amazon Titan

Credential type: AWS

The required Runtime Parameters are:

| Key | Description |
|---|---|
| AWS_ACCOUNT | Select an AWS credential, created on the Credentials Management page, for the AWS account. |
| AWS_REGION | Enter the AWS region of the AWS account. The default is us-west-1. |
| PROMPT_COLUMN_NAME | Enter the prompt column name from the input .csv file. The default column name is promptText. |

Google-hosted LLM: Google Bison

Credential type: Google Cloud Service Account

The required Runtime Parameters are:

| Key | Description |
|---|---|
| GOOGLE_SERVICE_ACCOUNT | Select a Google Cloud Service Account credential created on the Credentials Management page. |
| GOOGLE_REGION | Enter the GCP region of the Google service account. The default is us-west-1. |
| PROMPT_COLUMN_NAME | Enter the prompt column name from the input .csv file. The default column name is promptText. |

- In the Settings section, ensure Network access is set to Public.
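Before you test the model, you may want to check the Runtime Parameter values outside the UI. The following is a minimal Python sketch. The key names and the API version format come from the tables above. `REQUIRED_PARAMS`, `missing_params`, `is_valid_api_version`, and the provider labels are hypothetical helpers, not part of DataRobot. Values are held in a plain dictionary. For Credential-type parameters, any non-empty placeholder, such as a credential name, stands in for the credential selected in the UI.

```python
import re
from datetime import datetime

# Required Runtime Parameter keys per provider, copied from the tables above.
# The provider labels ("azure", "aws", "google") are hypothetical shorthand.
REQUIRED_PARAMS = {
    "azure": {
        "OPENAI_API_KEY",
        "OPENAI_API_BASE",
        "OPENAI_API_DEPLOYMENT_ID",
        "OPENAI_API_VERSION",
        "PROMPT_COLUMN_NAME",
    },
    "aws": {"AWS_ACCOUNT", "AWS_REGION", "PROMPT_COLUMN_NAME"},
    "google": {"GOOGLE_SERVICE_ACCOUNT", "GOOGLE_REGION", "PROMPT_COLUMN_NAME"},
}

# YYYY-MM-DD, optionally followed by -preview (for example, 2023-05-15).
_API_VERSION_RE = re.compile(r"(\d{4}-\d{2}-\d{2})(-preview)?")


def missing_params(provider: str, values: dict) -> list[str]:
    """Return the required keys that are absent or empty, sorted by name."""
    required = REQUIRED_PARAMS[provider]
    return sorted(key for key in required if not values.get(key))


def is_valid_api_version(version: str) -> bool:
    """Check the YYYY-MM-DD[-preview] format and that the date actually exists."""
    match = _API_VERSION_RE.fullmatch(version)
    if match is None:
        return False
    try:
        datetime.strptime(match.group(1), "%Y-%m-%d")
    except ValueError:
        return False
    return True


if __name__ == "__main__":
    azure_values = {
        "OPENAI_API_BASE": "https://example.openai.azure.com/",  # placeholder URL
        "OPENAI_API_DEPLOYMENT_ID": "gpt-35-turbo",
        "OPENAI_API_VERSION": "2023-05-15",
        "PROMPT_COLUMN_NAME": "promptText",
    }
    print(missing_params("azure", azure_values))  # ['OPENAI_API_KEY']
    print(is_valid_api_version(azure_values["OPENAI_API_VERSION"]))  # True
```

The version check only confirms the format described in the Azure table. Whether Azure OpenAI supports a given version is determined by Azure, not by this sketch.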

- After you complete the custom model assembly, you can test the model or create new versions. DataRobot recommends testing custom LLMs before deployment.
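When you test the custom LLM, the input .csv file must contain the column named by PROMPT_COLUMN_NAME (promptText by default). The following is a minimal sketch that uses Python's standard `csv` module to build such a file and confirm that the column is present. `write_prompts` and `read_prompts` are hypothetical helpers, not DataRobot functions.

```python
import csv
import io


def write_prompts(prompts: list[str], prompt_column: str = "promptText") -> str:
    """Build a one-column CSV of prompts, quoting values that contain commas."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[prompt_column])
    writer.writeheader()
    for prompt in prompts:
        writer.writerow({prompt_column: prompt})
    return buffer.getvalue()


def read_prompts(csv_text: str, prompt_column: str = "promptText") -> list[str]:
    """Return the prompts, failing early if the prompt column is missing."""
    reader = csv.DictReader(io.StringIO(csv_text))
    # fieldnames is None for empty input; otherwise it is the header row.
    if reader.fieldnames is None or prompt_column not in reader.fieldnames:
        raise ValueError(
            f"prompt column {prompt_column!r} not found; columns are {reader.fieldnames}"
        )
    return [row[prompt_column] for row in reader]


if __name__ == "__main__":
    sample = write_prompts(["What is a vector database?", "Summarize this text, briefly."])
    print(read_prompts(sample))
```

If you change PROMPT_COLUMN_NAME in the Runtime Parameters, pass the same name as `prompt_column` so that the check matches your configuration.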

- Next, click Register model, provide the registered model or version details, and then click Register model again to add the custom LLM to the Registry.

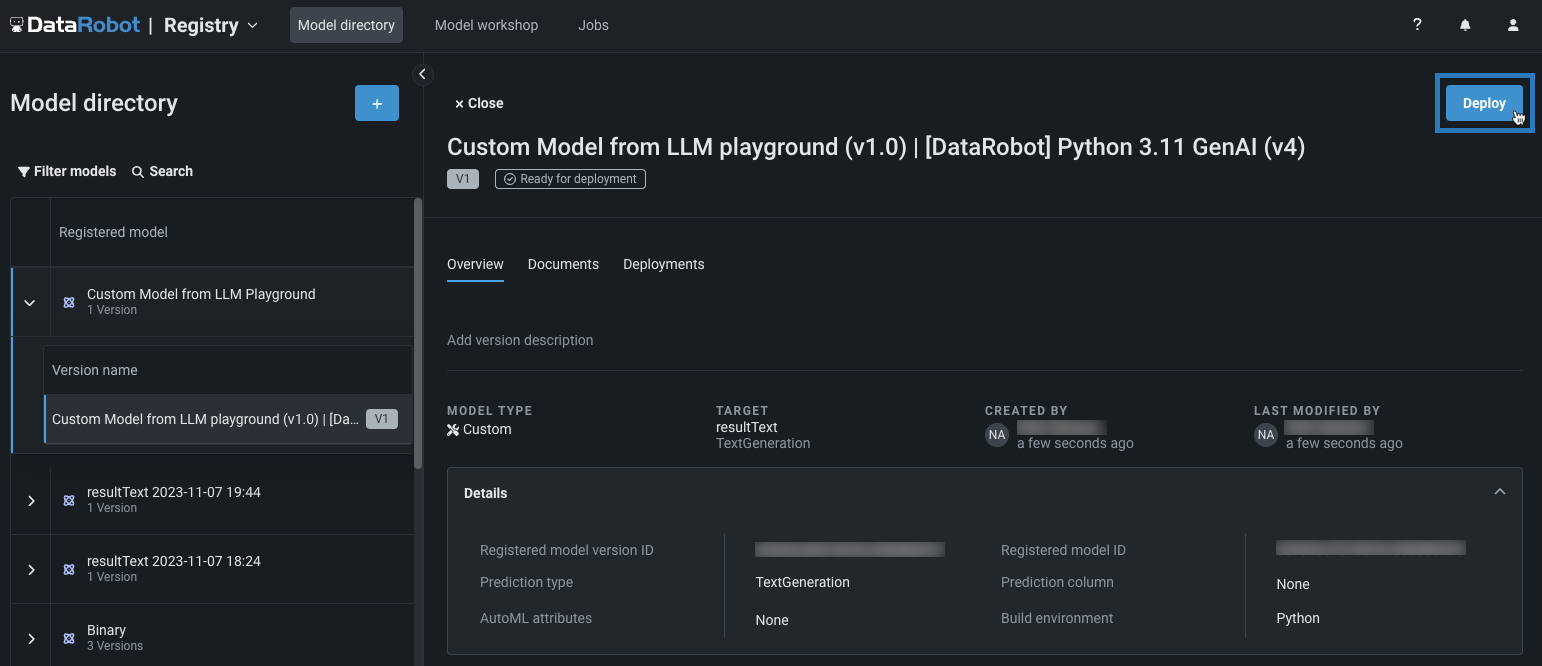

The registered model version opens in the Model directory.

- From the Model directory, in the upper-right corner of the registered model version panel, click Deploy and configure the deployment settings.

For more information on the deployment functionality available for generative models, see Monitoring support for generative models.

For more information on this process, see the Playground deployment considerations.