Model recommendation process

DataRobot provides an option to set the Autopilot modeling process to recommend a model for deployment. If you have enabled the Recommend and prepare a model for deployment option, one of the models—the most accurate individual, non-blender model—is selected and then prepared for deployment.
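The selection rule above (most accurate individual, non-blender model) can be sketched in a few lines. This is a minimal illustration, not DataRobot's implementation: the `LeaderboardEntry` type, the `pick_recommended` function, and the scores are all hypothetical, and the sketch assumes a metric where lower is better unless told otherwise.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class LeaderboardEntry:
    """Hypothetical stand-in for one Leaderboard row; not a DataRobot type."""
    name: str
    validation_score: float
    is_blender: bool


def pick_recommended(entries: Iterable[LeaderboardEntry],
                     lower_is_better: bool = True) -> Optional[LeaderboardEntry]:
    """Return the most accurate individual (non-blender) model, or None."""
    candidates = [e for e in entries if not e.is_blender]
    if not candidates:
        return None
    if lower_is_better:
        return min(candidates, key=lambda e: e.validation_score)
    return max(candidates, key=lambda e: e.validation_score)


# Made-up scores for illustration only.
leaderboard = [
    LeaderboardEntry("AVG Blender", 0.210, is_blender=True),
    LeaderboardEntry("Gradient Boosted Trees", 0.215, is_blender=False),
    LeaderboardEntry("Elastic-Net Classifier", 0.231, is_blender=False),
]
print(pick_recommended(leaderboard).name)  # Gradient Boosted Trees
```

Note that the blender has the best score in this toy Leaderboard but is skipped, because only individual models are candidates.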

Note

In Workbench, no model is tagged with the Recommended for Deployment badge, because comparing models across experiments on a multiproject Leaderboard would assign the badge to multiple models. DataRobot does, however, prepare a model for each experiment.

The following tabs describe the process for each modeling mode when the Recommend and prepare a model for deployment option is enabled.

The following describes the model recommendation process for Quick Autopilot mode in AutoML projects. Accuracy is based on the up-to-validation sample size (typically 64%). The resulting prepared model is marked with the Recommended for Deployment and Prepared for Deployment badges. You can also select any model from the Leaderboard and initiate the deployment preparation process.

The following describes the preparation process:

- First, DataRobot calculates Feature Impact for the selected model and uses it to generate a reduced feature list.

- Next, the app retrains the selected model on the reduced feature list. If the new model performs better than the original model, DataRobot uses the new model for the next stage. Otherwise, the original model is used.

- Finally, DataRobot retrains the selected model as a frozen run using a 100% sample size and selects it as Recommended for Deployment.

To apply the reduced feature list to the recommended model, manually retrain it—or any Leaderboard model—using the reduced feature list.

Depending on the size of the dataset, the insights for the recommended model are based either on the up-to-holdout model or, if DataRobot can use out-of-sample predictions, on the recommended model trained at a 100% sample size.

The following describes the model recommendation process for full Autopilot and Comprehensive mode in AutoML projects. Accuracy is based on the up-to-validation sample size (typically 64%). The resulting prepared model is marked with the Recommended for Deployment and Prepared for Deployment badges. You can also select any model from the Leaderboard and initiate the deployment preparation process. (Models manually prepared for deployment are marked only with the Prepared for Deployment badge.)

The following describes the preparation process:

- First, DataRobot calculates Feature Impact for the selected model and uses it to generate a reduced feature list.

- Next, the app retrains the selected model on the reduced feature list. If the new model performs better than the original model, DataRobot uses the new model for the next stage. Otherwise, the original model is used.

- DataRobot then retrains the selected model at an up-to-holdout sample size (typically 80%). As long as the sample is under the frozen threshold (1.5GB), the stage is not frozen.

- Finally, DataRobot retrains the selected model as a frozen run (hyperparameters are not changed from the up-to-holdout run) using a 100% sample size and selects it as Recommended for Deployment.

Depending on the size of the dataset, the insights for the recommended model are based either on the up-to-holdout model or, if DataRobot can use out-of-sample predictions, on the recommended model trained at a 100% sample size.
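The preparation stages can be read as a small pipeline. The sketch below is an illustration under stated assumptions, not DataRobot code: `TrainedModel`, `prepare_for_deployment`, and the `fake_*` helpers are hypothetical, scores are treated as lower-is-better, and the 1.5GB threshold is interpreted in binary gigabytes. The `include_holdout_stage` flag mirrors the fact that the Quick Autopilot steps listed earlier do not include the up-to-holdout stage.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

# 1.5GB frozen threshold from the text; binary gigabytes are an assumption.
FROZEN_THRESHOLD_BYTES = int(1.5 * 1024**3)


@dataclass
class TrainedModel:
    """Hypothetical record of one training run; not a DataRobot type."""
    feature_list: Sequence[str]
    sample_pct: float
    frozen: bool
    score: float  # lower is better in this sketch


def prepare_for_deployment(
    original: TrainedModel,
    feature_impact: Callable[[TrainedModel], List[str]],
    train: Callable[[Sequence[str], float, bool], TrainedModel],
    dataset_bytes: int,
    holdout_pct: float = 80.0,
    include_holdout_stage: bool = True,
) -> List[TrainedModel]:
    """Return the models built at each stage, with the final model last."""
    stages: List[TrainedModel] = []
    # Stage 1: Feature Impact yields a reduced feature list.
    reduced = feature_impact(original)
    # Stage 2: retrain on the reduced list and keep the better scorer.
    candidate = train(reduced, original.sample_pct, False)
    stages.append(candidate)
    best = candidate if candidate.score < original.score else original
    # Stage 3: up-to-holdout retrain, frozen only at or above the threshold.
    if include_holdout_stage:
        sample_bytes = dataset_bytes * holdout_pct / 100
        frozen = sample_bytes >= FROZEN_THRESHOLD_BYTES
        best = train(best.feature_list, holdout_pct, frozen)
        stages.append(best)
    # Stage 4: frozen run at a 100% sample size.
    stages.append(train(best.feature_list, 100.0, True))
    return stages


def fake_feature_impact(model: TrainedModel) -> List[str]:
    # Toy rule: keep the first half of the features.
    features = list(model.feature_list)
    return features[: max(1, len(features) // 2)]


def fake_train(features: Sequence[str], sample_pct: float, frozen: bool) -> TrainedModel:
    # Toy scoring rule: more data and fewer features give a lower score.
    score = 0.3 - sample_pct / 1000 + 0.01 * len(features)
    return TrainedModel(list(features), sample_pct, frozen, score)


original = TrainedModel(["a", "b", "c", "d"], 64.0, False, score=0.30)
stages = prepare_for_deployment(
    original, fake_feature_impact, fake_train, dataset_bytes=200 * 1024**2
)
for model in stages:
    print(model.sample_pct, model.frozen, list(model.feature_list))
```

Injecting `feature_impact` and `train` as callables keeps the control flow visible without depending on any real training backend.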

The following describes the model recommendation process for OTV and time series projects in both Quick and full Autopilot mode. The backtesting process for OTV works as follows (time series always runs on 100% training samples for each backtest):

- In full/comprehensive mode, the recommendation process runs iterative training at 25%, 50%, and 100% training samples of each backtest.

- In Quick mode, the process uses 100% of the maximum training size.

When backtesting is finished, one of the models—the most accurate individual, non-blender model—is selected and then prepared for deployment. The resulting prepared model is marked with the Recommended for Deployment badge.

The following describes the preparation process for time-aware projects:

- First, DataRobot calculates Feature Impact for the selected model and uses it to generate a reduced feature list.

- Next, the app retrains the selected model on the reduced feature list.

- If the new model performs better than the original model, DataRobot then retrains the better scoring model on the most recent data (using the same duration/row count as the original model). If using duration, and the equivalent period does not provide enough rows for training, DataRobot extends it until the minimum is met.

Note that there are two exceptions for time series models:

- Feature reduction cannot be run for baseline (naive) or ARIMA models. This is because they only use date+naive predictions features (i.e., there is nothing to reduce).

- Because they don't use weights to train and don't need retraining, baseline (naive) models are not retrained on the most recent data.
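The "extend until the minimum is met" rule for duration-based retraining on the most recent data can be sketched as follows. This is an assumption-laden illustration: the function name `latest_training_window`, the `min_rows` parameter, and the inclusive window boundaries are hypothetical, and DataRobot's actual minimum and extension logic are not specified in this document.

```python
from datetime import datetime, timedelta
from typing import List, Tuple


def latest_training_window(timestamps: List[datetime], duration: timedelta,
                           min_rows: int) -> Tuple[datetime, datetime, int]:
    """Return (start, end, row_count) for a window ending at the newest row.

    The window first spans `duration`. If it holds fewer than `min_rows`
    rows, the start moves back to older rows until the minimum is met or
    the data runs out.
    """
    if not timestamps:
        raise ValueError("timestamps must not be empty")
    ordered = sorted(timestamps)
    end = ordered[-1]
    start = end - duration
    in_window = [t for t in ordered if t >= start]
    if len(in_window) < min_rows:
        # Extend backwards to the oldest of the newest `min_rows` rows.
        start = ordered[-min_rows:][0]
        in_window = [t for t in ordered if t >= start]
    return start, end, len(in_window)


# Ten daily rows: a 3-day duration covers only 4 rows, so the window
# is extended back to reach the 6-row minimum.
rows = [datetime(2024, 1, day) for day in range(1, 11)]
start, end, count = latest_training_window(rows, timedelta(days=3), min_rows=6)
print(start.date(), end.date(), count)  # 2024-01-05 2024-01-10 6
```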

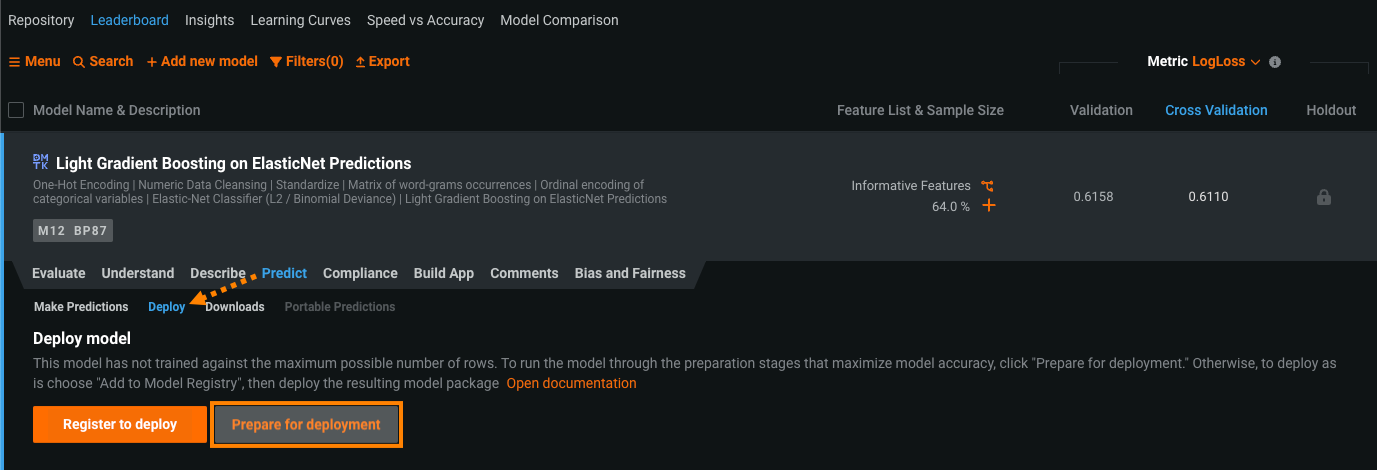

Prepare a model for deployment

Although Autopilot recommends and prepares a single model for deployment, you can initiate the Autopilot recommendation and deployment preparation stages for any Leaderboard model. To do so, select a model from the Leaderboard and navigate to Predict > Deploy.

Click Prepare for Deployment. DataRobot begins running the recommendation stages described above for the selected model (progress is shown in the right panel). In other words, DataRobot runs Feature Impact, retrains the model on a reduced feature list, trains on a higher sample size, and then trains on the full sample size (for projects without date/time partitioning) or on the most recent data (for time-aware projects).

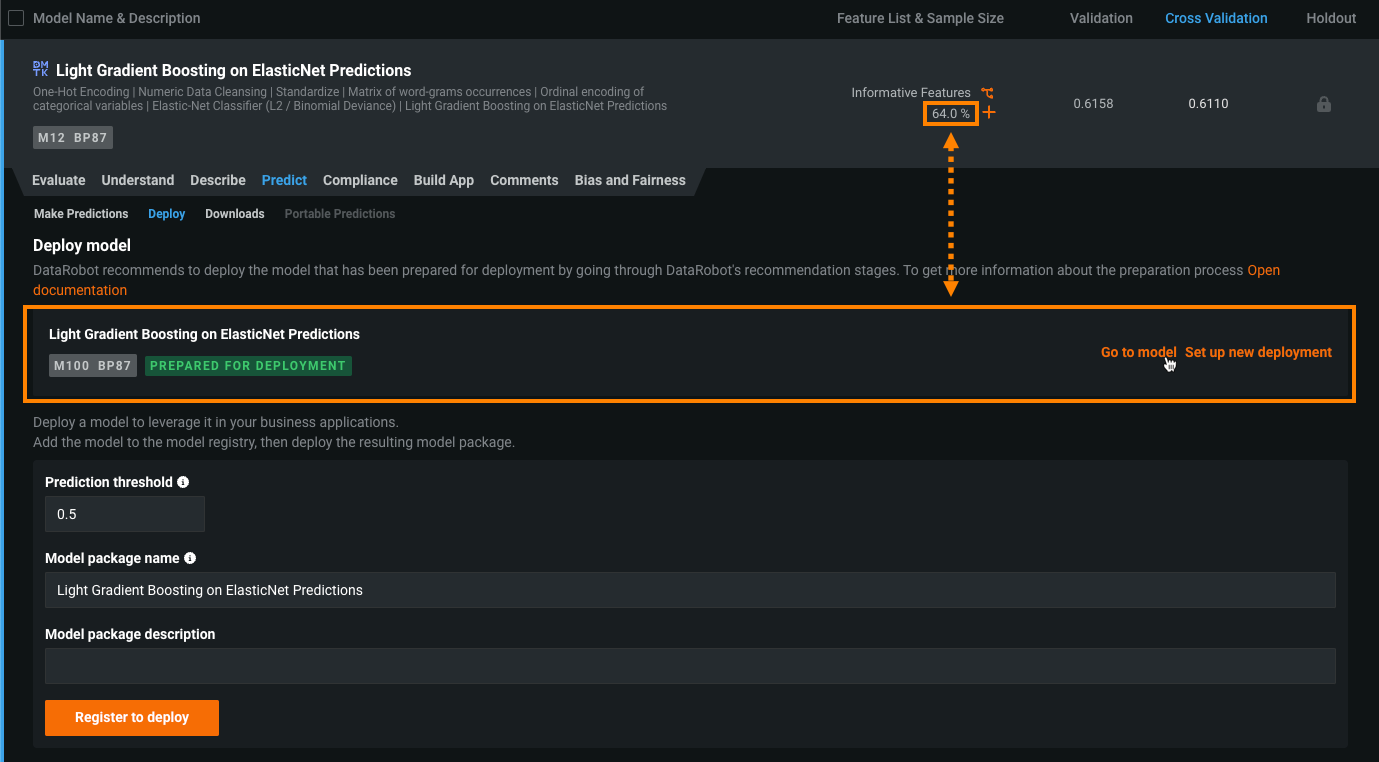

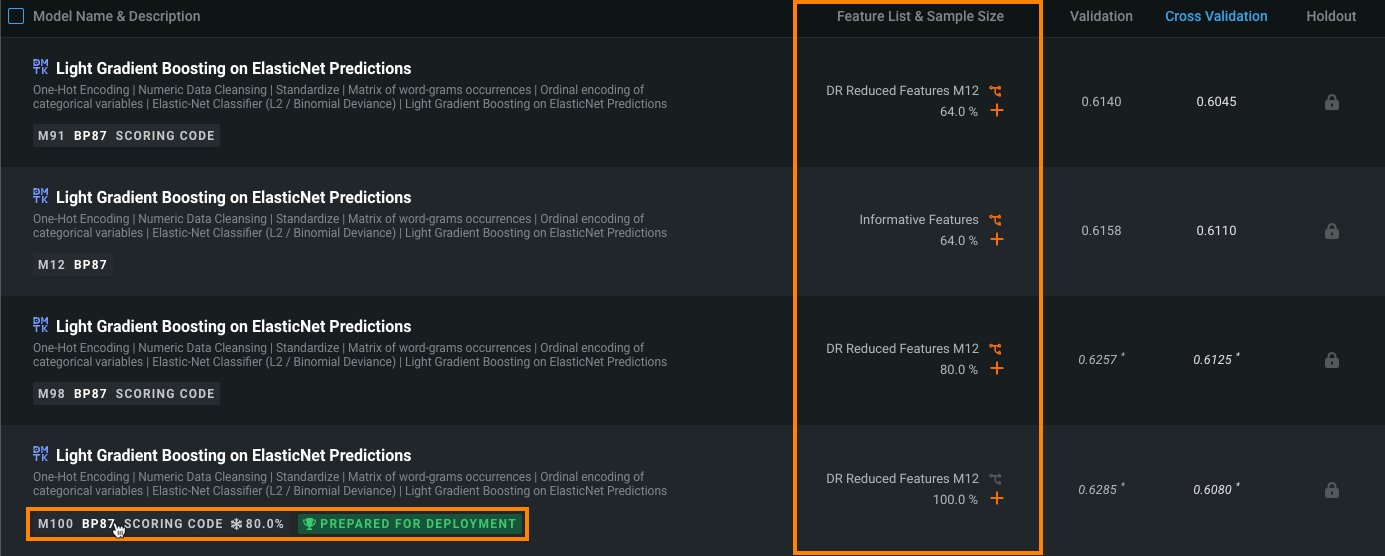

Once the process completes, DataRobot lists the prepared model, built with a 100% sample size, on the Leaderboard with the Prepared for Deployment badge. The originally recommended model also maintains its badge. From the Deploy tab of the original, limited sample size model you prepared for deployment, you can click Go to model to see the prepared, full sample size model on the Leaderboard.

Click the new model's blueprint number to see the new feature list and sample sizes associated with the process.

If you return to the model that you made the original request from (for example, the 64% sample size) and access the Deploy tab, you'll see that it is linked to the prepared model.

Notes and considerations

- DataRobot always retrains the final Recommended for Deployment model at 100% as a frozen run. This makes retraining faster and ensures that the 100% model uses the same settings as the 80% model.

- If the model that is recommended for deployment has been trained into the validation set, DataRobot unlocks and displays the Holdout score for this model, but not for the other Leaderboard models. Holdout can be unlocked for the other models from the right panel.

- If the model that is recommended for deployment has been trained into the validation set, or the project was created without a holdout partition, the ability to compute predictions using validation and holdout data is not available.

- The heuristic logic of automatic model recommendation may differ across project types. For example, retraining a model with non-redundant features is implemented in regression and binary classification projects, while retraining a model at a higher sample size is implemented in regression, binary classification, and multiclass projects.

- If you terminate a model that is being trained on a higher sample size, or that training does not finish successfully, the model will not be a candidate for Recommended for Deployment.
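The note on project types can be captured as a simple lookup. The sketch below is only a restatement of that note: the step labels and project-type keys are illustrative names rather than DataRobot identifiers, and treating multiclass as lacking the non-redundant-features step is an inference from the example given, which may not be exhaustive.

```python
from typing import Dict, FrozenSet

# Illustrative step labels; not DataRobot identifiers.
REDUCED_FEATURES = "retrain_on_non_redundant_features"
HIGHER_SAMPLE = "retrain_at_higher_sample_size"

# Only the project types named in the note are listed here.
STEPS_BY_PROJECT_TYPE: Dict[str, FrozenSet[str]] = {
    "regression": frozenset({REDUCED_FEATURES, HIGHER_SAMPLE}),
    "binary_classification": frozenset({REDUCED_FEATURES, HIGHER_SAMPLE}),
    "multiclass": frozenset({HIGHER_SAMPLE}),
}


def step_applies(project_type: str, step: str) -> bool:
    """Return True if the note lists `step` as implemented for `project_type`."""
    return step in STEPS_BY_PROJECT_TYPE.get(project_type, frozenset())


print(step_applies("multiclass", REDUCED_FEATURES))  # False
print(step_applies("regression", HIGHER_SAMPLE))     # True
```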

Deprecated badges

Projects created prior to v6.1 may also have been tagged with the Most Accurate and/or Fast & Accurate badges. With improvements made to Autopilot automation, these badges are no longer necessary but are still visible, if they were assigned, to pre-v6.1 projects. Contact your DataRobot representative for code snippets that can help transition automation built around the deprecated badges.

- The model marked Most Accurate is typically, but not always, a blender. As the name suggests, it is the most accurate model on the Leaderboard, determined by a ranking of validation or cross-validation scores.

- The Fast & Accurate badge, applicable only to non-blender models, is assigned to the model that is both the most accurate and is the fastest to make predictions. To evaluate, DataRobot uses prediction timing from:

    - a project’s holdout set.

    - a sample of the training data for a project without holdout.

Not every project has a model tagged as Fast & Accurate. This happens if the prediction time does not meet the minimum speed threshold determined by an internal algorithm.