Snowflake integration

An integration between DataRobot and Snowflake lets joint users run data science projects in DataRobot while pushing computation down to Snowflake to optimize workload performance. In Feature Discovery training and prediction workflows, DataRobot pushes relational inner joins, projections, and filters down to the Snowflake platform as SQL. Because the joins run natively in the Snowflake database, the data is reduced to smaller datasets before it is transferred across the network and loaded into DataRobot. These smaller datasets reduce project runtimes.
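To make the pushdown idea concrete, the following minimal Python sketch composes the kind of single SQL statement that combines projection (the `SELECT` list), inner joins, and filters (the `WHERE` clause), so that only the reduced result would leave Snowflake. It is an illustration under stated assumptions, not DataRobot's actual query generator: the `SecondaryJoin` class, the `build_pushdown_sql` helper, and all table and column names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SecondaryJoin:
    """Hypothetical description of one secondary table joined to the primary table."""
    table: str      # fully qualified Snowflake table name (illustrative)
    alias: str      # alias used for the secondary table in the query
    left_key: str   # join key column on the primary table
    right_key: str  # join key column on the secondary table


def build_pushdown_sql(
    primary_table: str,
    columns: List[str],
    joins: List[SecondaryJoin],
    filters: List[str],
) -> str:
    """Compose one SELECT that projects, inner-joins, and filters inside Snowflake."""
    # Projection: only the listed columns are returned over the network.
    lines = [f"SELECT {', '.join(columns)}", f"FROM {primary_table} AS p"]
    # Inner joins: each secondary table is joined to the primary table on its key.
    for j in joins:
        lines.append(
            f"INNER JOIN {j.table} AS {j.alias} "
            f"ON p.{j.left_key} = {j.alias}.{j.right_key}"
        )
    # Filters: rows are discarded in Snowflake before any transfer happens.
    if filters:
        lines.append("WHERE " + " AND ".join(filters))
    return "\n".join(lines)


# Usage with hypothetical table and column names.
sql = build_pushdown_sql(
    primary_table="SALES.PUBLIC.LOANS",
    columns=["p.LOAN_ID", "p.AMOUNT", "t.TXN_AMOUNT"],
    joins=[SecondaryJoin("SALES.PUBLIC.TRANSACTIONS", "t", "CUSTOMER_ID", "CUSTOMER_ID")],
    filters=["t.TXN_DATE >= '2023-01-01'"],
)
print(sql)
```

The point of generating one statement rather than pulling each table separately is that the join, column pruning, and row filtering all happen where the data lives, which is the effect the paragraph above describes.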

To enable integration with Snowflake, the following requirements must be met:

- A Snowflake data connection is set up.

- All secondary datasets are stored in Snowflake.

- All Snowflake sources are stored in the same warehouse.

- All datasets are configured as dynamic datasets in the AI Catalog.

- You have write permissions to one of the schemas in use or to the `PUBLIC` schema of the database in use.
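The requirements above can be read as a single eligibility check. The following Python sketch expresses them as a function that returns the list of unmet requirements. It is a hypothetical model for illustration only: the `DatasetInfo` descriptor, the `unmet_requirements` function, the `"snowflake"` source label, and the schema and warehouse names are assumptions, not part of the DataRobot API, and the `PUBLIC` schema check is simplified to a schema name.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class DatasetInfo:
    """Hypothetical summary of one AI Catalog dataset used in a Feature Discovery project."""
    name: str
    source: str               # illustrative values, e.g. "snowflake" or "s3"
    warehouse: Optional[str]  # Snowflake warehouse, if the source is Snowflake
    is_dynamic: bool          # True if registered as a dynamic dataset


def unmet_requirements(
    has_snowflake_connection: bool,
    primary: DatasetInfo,
    secondaries: List[DatasetInfo],
    writable_schemas: Set[str],
    schemas_in_use: Set[str],
) -> List[str]:
    """Return a message for each requirement that is not met (empty list = eligible)."""
    problems = []
    all_datasets = [primary, *secondaries]
    if not has_snowflake_connection:
        problems.append("no Snowflake data connection")
    # Every secondary dataset must be stored in Snowflake.
    if any(d.source != "snowflake" for d in secondaries):
        problems.append("a secondary dataset is not stored in Snowflake")
    # All Snowflake sources must share one warehouse.
    warehouses = {d.warehouse for d in all_datasets if d.source == "snowflake"}
    if len(warehouses) > 1:
        problems.append("Snowflake sources span more than one warehouse")
    # Every dataset must be a dynamic dataset.
    if not all(d.is_dynamic for d in all_datasets):
        problems.append("a dataset is not a dynamic dataset")
    # Write access to a schema in use, or to PUBLIC (simplified to a name check).
    if not (writable_schemas & (schemas_in_use | {"PUBLIC"})):
        problems.append("no write permission on a schema in use or on PUBLIC")
    return problems


# Usage with hypothetical datasets that satisfy every requirement.
loans = DatasetInfo("LOANS", "snowflake", "ANALYTICS_WH", True)
txns = DatasetInfo("TRANSACTIONS", "snowflake", "ANALYTICS_WH", True)
print(unmet_requirements(True, loans, [txns], {"PUBLIC"}, {"LOANS_SCHEMA"}))
```

Returning the full list of failures, rather than a single boolean, makes it easier to see which requirement prevents Snowflake mode from being enabled.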

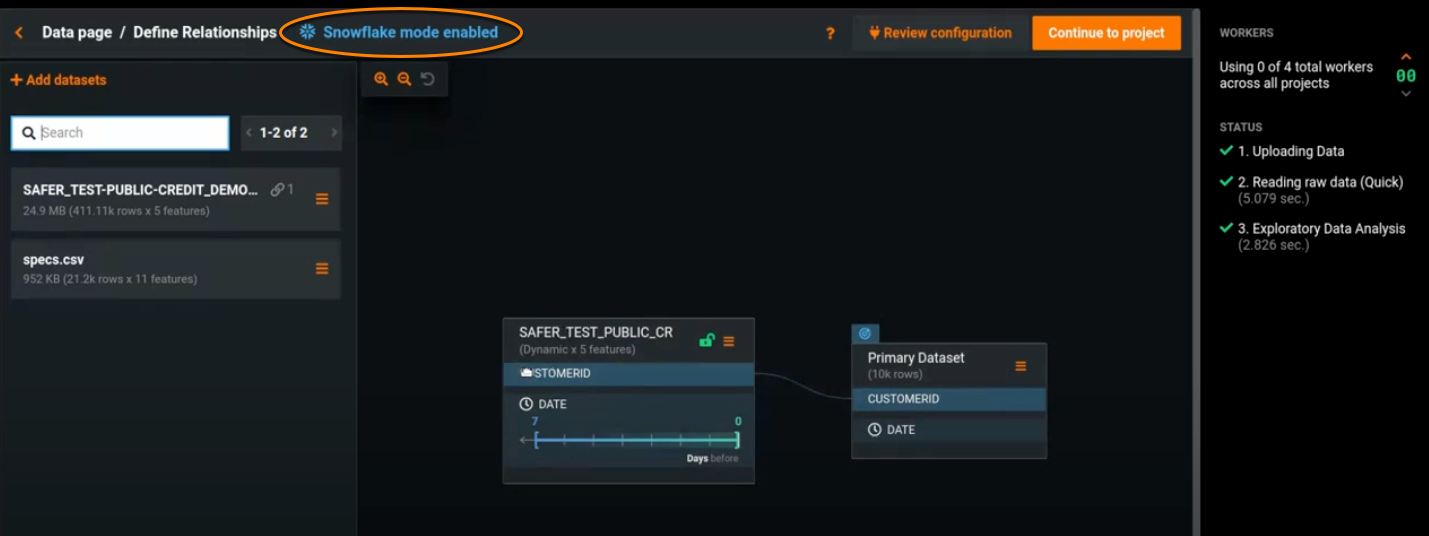

If all of the above requirements are met, DataRobot establishes the integration automatically and displays the Snowflake icon with the label "Snowflake mode enabled" in blue at the top of the Define Relationships page.