GPUs for deep learning

Availability information

GPU workers are a premium feature. Contact your DataRobot representative for enablement information.

Support for deep learning models, such as large language models, is increasingly important in an expanding number of business use cases. While some of these models can run on CPUs, others require GPUs to achieve reasonable training times. To efficiently train, host, and predict using these "heavier" deep learning models, DataRobot leverages NVIDIA GPUs within the application. GPU training can be enabled per project; when enabled, GPUs are used as part of the Autopilot process.

GPU task support

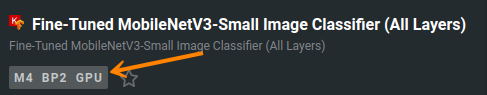

For some blueprints, two versions are available in the repository, allowing DataRobot to train on either CPU or GPU workers. Each version is optimized to train on a particular worker type and is marked with an identifying badge: CPU VARIANT or GPU VARIANT. Blueprints with the GPU VARIANT badge are always trained on a GPU worker. All other blueprints are trained on a CPU worker.

Consider the following when working with GPU blueprints:

- GPU blueprints will only be present in the repository when image or text features are available in the training data.

- In some cases, DataRobot trains GPU blueprints as part of Quick or full Autopilot. To train additional blueprints on GPU workers, you can run them manually from the repository or retrain using Comprehensive mode. (See the modeling modes documentation for details.)

Enable GPU workers

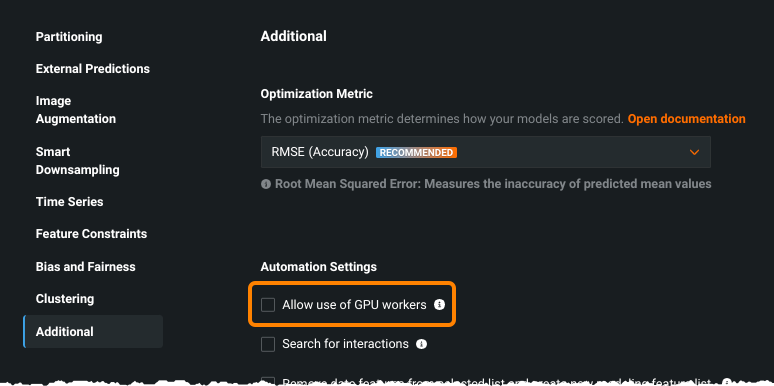

To enable GPU workers, open advanced options and select the Additional tab.

Check the Allow use of GPU workers box, which controls whether GPU workers can be used for the project. Whether they are actually used depends on two things: the appropriate GPU blueprints must be available, and GPU workers must be available. When this setting is enabled, Autopilot always prefers to run a GPU variant over a CPU variant. For example, Comprehensive mode will not run any CPU variant.
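The preference rule above can be illustrated with a small sketch. This is not DataRobot code: the `Blueprint` class, the `family` field, and `select_blueprints` are hypothetical names, and falling back to the CPU variant when no GPU workers are available is an assumption made for the example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Blueprint:
    # Hypothetical model of a repository entry; not a DataRobot class.
    family: str             # key shared by the CPU and GPU versions of a blueprint
    variant: Optional[str]  # "GPU", "CPU", or None when only one version exists


def select_blueprints(candidates: List[Blueprint],
                      allow_gpu: bool,
                      gpu_workers_available: bool) -> List[Blueprint]:
    """Pick one blueprint per family, preferring the GPU variant when usable."""
    use_gpu = allow_gpu and gpu_workers_available
    chosen = {}
    for bp in candidates:
        if bp.variant == "GPU" and not use_gpu:
            # Assumption: GPU variants are skipped when GPUs cannot be used.
            continue
        current = chosen.get(bp.family)
        if current is None or (use_gpu and bp.variant == "GPU"):
            chosen[bp.family] = bp
    return list(chosen.values())


candidates = [
    Blueprint("text_classifier", "CPU"),
    Blueprint("text_classifier", "GPU"),
    Blueprint("gradient_boosting", None),
]
for bp in select_blueprints(candidates, allow_gpu=True, gpu_workers_available=True):
    print(bp.family, bp.variant)
```

With the setting enabled and GPU workers available, only the GPU variant of `text_classifier` is kept; with either condition false, the sketch keeps the CPU variant instead.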

GPU modeling process

When a blueprint is scheduled for training, either manually or through Autopilot, and GPU capabilities are enabled:

-

DataRobot checks the blueprints to determine which models can be trained on GPU workers and which models can only be trained on CPU workers.

-

Models flagged for GPU workers are sent to the GPU worker queue; all other models are sent to the CPU worker queue.

-

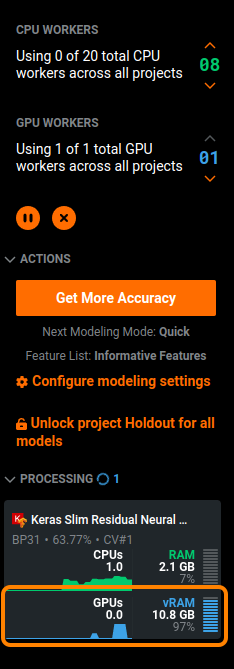

The Worker Queue shows the number of CPUs and GPUs being used for training, as well as the total number available. You can add GPUs, if available, to increase queue processing.

-

Once a models's training is complete, it appears on the Leaderboard. A badge indicates whether the model was trained on GPUs.

-

If you retrain a model, DataRobot applies the same logic as for the original training job.
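The routing step in this process can be sketched in a few lines. This is an illustration only, assuming GPU capabilities are enabled for the project; `route_to_queues` and the blueprint names are hypothetical, and only the badge text comes from the description above.

```python
from typing import Dict, Iterable, List, Optional, Tuple


def route_to_queues(blueprints: Iterable[Tuple[str, Optional[str]]]) -> Dict[str, List[str]]:
    """Split (name, badge) pairs into a GPU queue and a CPU queue.

    Hypothetical helper: only blueprints badged "GPU VARIANT" go to the
    GPU queue; everything else goes to the CPU queue.
    """
    queues: Dict[str, List[str]] = {"gpu": [], "cpu": []}
    for name, badge in blueprints:
        key = "gpu" if badge == "GPU VARIANT" else "cpu"
        queues[key].append(name)
    return queues


scheduled = [
    ("image_classifier", "GPU VARIANT"),
    ("text_classifier", "CPU VARIANT"),
    ("gradient_boosting", None),  # blueprint with a single, unbadged version
]
print(route_to_queues(scheduled))
```

Because retraining applies the same logic as the original job, calling the helper again with the same badge would place the model in the same queue.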

Feature considerations

- Due to inherent differences in how floating-point arithmetic is implemented on CPUs and GPUs, using a GPU-trained model in environments without a GPU may lead to inconsistencies. Inconsistencies will vary depending on the model and dataset, but will likely be insignificant.

- Training on GPUs can be non-deterministic. Training the same model on the same partition may produce a slightly different model that scores differently on the test set.

- GPUs are only used for training; they are not used for prediction or insights computation.

- There is no GPU support for custom tasks or custom models.
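One general reason results can differ between hardware is that floating-point addition is not associative, so the order in which values are accumulated changes the rounded result. The sketch below demonstrates this in plain Python on a CPU; it is not a model of how DataRobot or any GPU library performs reductions, and the function names are made up for the example.

```python
import math
from typing import Sequence


def sum_left_to_right(values: Sequence[float]) -> float:
    """Accumulate in order, as a simple sequential loop would."""
    total = 0.0
    for v in values:
        total += v
    return total


def sum_pairwise(values: Sequence[float]) -> float:
    """Accumulate as a balanced tree, similar in shape to a parallel reduction."""
    if not values:
        return 0.0
    if len(values) == 1:
        return values[0]
    mid = len(values) // 2
    return sum_pairwise(values[:mid]) + sum_pairwise(values[mid:])


values = [1e16, 1.0, -1e16, 1.0]
print("left to right:", sum_left_to_right(values))
print("pairwise:     ", sum_pairwise(values))
print("exact (fsum): ", math.fsum(values))
```

The two orderings give different answers for the same inputs, and neither matches the exactly rounded sum from `math.fsum`. In real models the discrepancies are typically far smaller than in this deliberately extreme example.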